A version of this article was first published on ExchangeWire

North star KPIs deserve north star measurement

DAU, revenue, margins: these are the goals around which entire companies align their teams, from product to engineering to sales. For marketing teams, this means balancing long-term and short-term investments to drive growth in both demand generation and demand capture.

The complexity of this task can be staggering. Marketing activities span a vast landscape of online, offline, owned and earned media, influencer partnerships and more. The metrics used to gauge success are equally diverse, covering awareness, reach, frequency, attention, engagement and, ultimately, conversion.

In this environment, the ability to holistically assess and prove the impact of marketing activities on the company’s bottom line becomes crucial. It’s essential not only for defending existing marketing budgets, but also for making a case for their growth. Those who can draw a clear throughline from marketing activities to business outcomes help their CFOs recognise the strategic value of marketing – elevating it beyond simply an operational expense.

Last-click marketers must evolve

The rise of the third-party cookie cemented last-click attribution as the default model for measuring marketing success, a practice that unfortunately persists among many of the world’s largest brands. However, the durability of attribution-based measurement is being challenged by growing user-level signal loss. Apple has seen more than 70% of users opt out of tracking via its App Tracking Transparency (ATT) prompts, which govern access to the IDFA, and Chrome will soon follow with informed consent for third-party cookies.

There is no better time than now for marketers to move beyond last-click attribution and adopt alternative methods that both respect user privacy and bring rigour to their measurement frameworks.

In the first article of this series, we explored how Incrementality Experiments, Marketing Mix Models (MMMs) and Data-Driven Attribution (DDA) have evolved and are becoming crucial components of the modern marketer’s toolkit. This article expands on how marketers should use incrementality as both the north star for assessing impact and the ground truth for decision making.

Incrementality is the strongest evidence of marketing impact

The strongest proof one can provide is that which demonstrates cause and effect – and incrementality does this best. For marketers, incrementality identifies business results that would not have been achieved without specific marketing activities. In other words: if a company were to withhold $X of budget from its marketing campaigns, what would be the resulting loss in sales?

In performance marketing, incrementality measures the additional conversions, revenue and engagement generated. For brand marketing, it quantifies the incremental gains in awareness, consideration, and/or intent of audiences. As the latter creates halo effects on the former, a holistic approach that measures both brand and performance is essential for marketers with substantial investments in both.

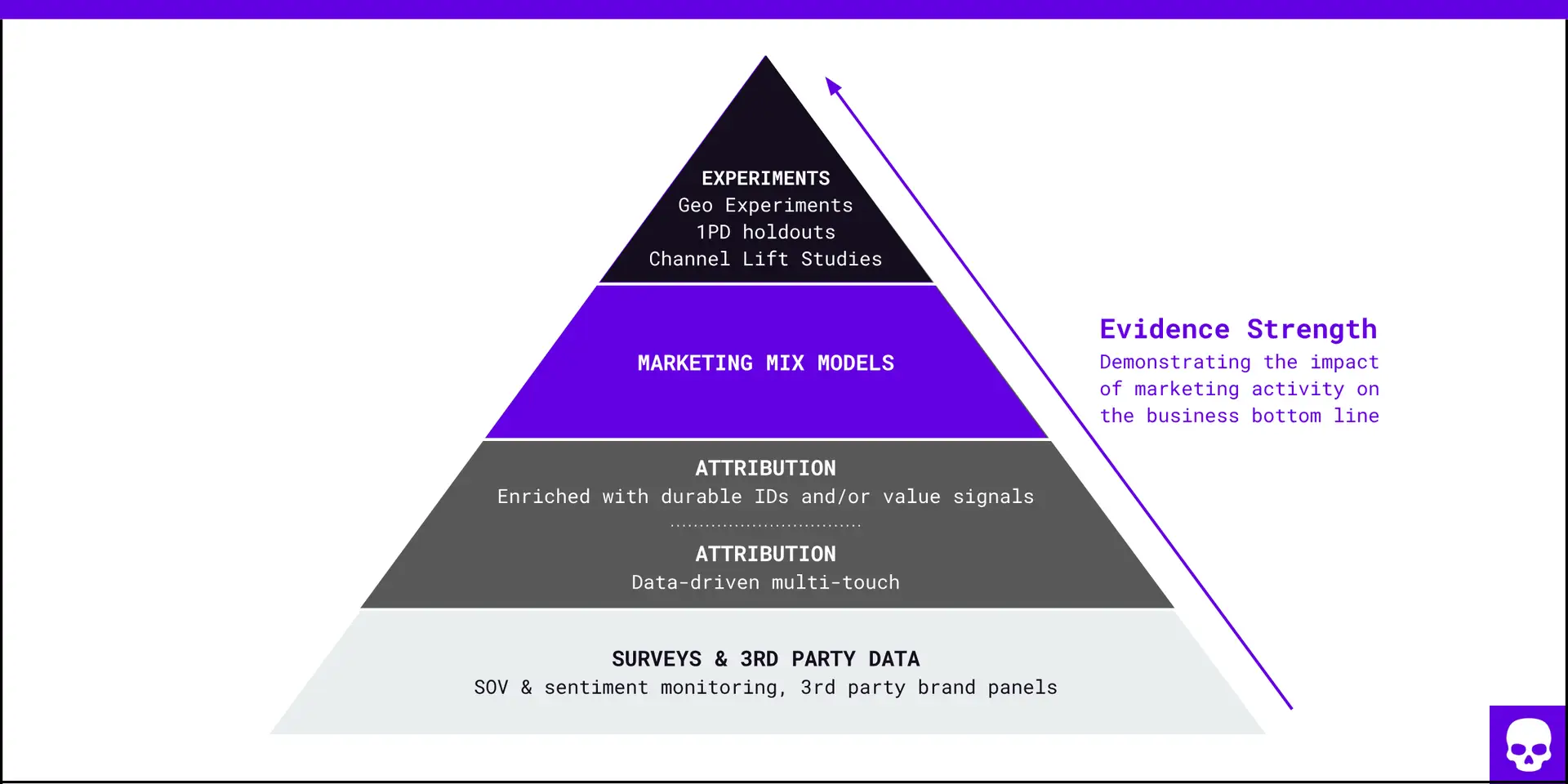

Incrementality experiments are the gold standard for measuring these causal effects (see Figure A). They use randomised controlled trials (RCTs), comparing outcomes in a treatment group exposed to marketing against a control group that is not. The results pinpoint whether specific channels, creatives, tactics, or budget allocations drove any incremental impact during the experiment’s duration.

Figure A – Marketing impact evidence pyramid
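To make the treatment-versus-control logic concrete, here is a minimal sketch of how a user-level holdout test might be read out. It assumes a single randomised split, conversion counts for each group and the media spend on the exposed group; the function `lift_test` and the numbers are hypothetical. The control group's conversion rate stands in for the counterfactual, i.e. what the exposed group would have converted at without the campaign.

```python
from math import sqrt
from statistics import NormalDist


def lift_test(conv_t, n_t, conv_c, n_c, spend):
    """Read out a randomised holdout test (hypothetical helper).

    conv_t, n_t: conversions and users in the exposed (treatment) group
    conv_c, n_c: conversions and users in the holdout (control) group
    spend: media spend behind the treatment group during the test
    """
    rate_t = conv_t / n_t
    rate_c = conv_c / n_c
    # Counterfactual: conversions the treatment group would have had without ads
    baseline = rate_c * n_t
    incremental = conv_t - baseline
    relative_lift = (rate_t - rate_c) / rate_c
    # Two-sided two-proportion z-test using the pooled conversion rate
    pooled = (conv_t + conv_c) / (n_t + n_c)
    se = sqrt(pooled * (1 - pooled) * (1 / n_t + 1 / n_c))
    z = (rate_t - rate_c) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return {
        "incremental_conversions": incremental,
        "relative_lift": relative_lift,
        # Incremental CPA: spend per conversion that would not have happened otherwise
        "icpa": spend / incremental if incremental > 0 else float("inf"),
        "p_value": p_value,
    }


result = lift_test(conv_t=1_200, n_t=100_000, conv_c=1_000, n_c=100_000, spend=20_000)
```

In this made-up example the test implies 200 incremental conversions (a 20% relative lift) at an iCPA of $100. A simple z-test is used for illustration; real studies may need to handle unequal exposure, contamination between groups or geo-level randomisation.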

Experiments alone are not enough

That said, experiments have inherent limitations. They take time, require sufficient scale to detect significant effects, and incur the opportunity cost of withholding spend from control groups. Moreover, they don’t account for diminishing returns or for the decay (carryover) effects of marketing campaigns over time.
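The scale requirement can be estimated before a test launches. The sketch below applies the standard normal-approximation sample-size formula for a two-sided, two-proportion test; the function name and the default 5% significance and 80% power levels are illustrative assumptions, not recommendations.

```python
from math import ceil
from statistics import NormalDist


def users_per_group(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate users needed in EACH of treatment and control to detect a
    given relative lift in conversion rate (two-sided two-proportion z-test)."""
    p_c = baseline_rate
    p_t = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # quantile for desired power
    variance = p_c * (1 - p_c) + p_t * (1 - p_t)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p_t - p_c) ** 2)
```

With a 1% baseline conversion rate, detecting a 10% relative lift needs roughly 163,000 users per group, while a 20% lift needs roughly 43,000. Because required scale grows with the inverse square of the effect, small expected lifts are what make experiments slow and costly.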

These constraints mean that we need other, complementary measurement methods to support the full breadth of marketers’ in-flight, tactical, and strategic planning decisions. As such, a balanced approach is needed to attain the end goal of incrementality-first marketing measurement.

This is where marketing mix models (MMMs) and data-driven attribution (DDA) come in. While neither is considered the gold standard that incrementality experiments are, both can tease out causal relationships in their own way. MMMs analyse aggregated campaign data over time, while DDA analyses user-level data; each provides differentiated yet valuable insights into marketing effectiveness.

The MMM resurgence and futureproofing of attribution

MMMs provide the widest coverage of marketing activity, encompassing paid, owned, and earned media across both online and offline channels. They can also incorporate experiment results to inform the selection of models that more accurately reflect the incremental impact of different channels. Notably, several startups, from Measured to Recast, are productising MMMs. They use media channel APIs to gather campaign data quickly, and offer both proprietary and open-source models to enhance measurement rigour and granularity.
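As a simplified illustration of how experiment results can inform model selection, the sketch below scores candidate MMM fits by how closely each model's implied incremental conversions for the tested channels match what experiments measured over the same window, using mean absolute percentage error. The data structures, function names and numbers are hypothetical; production tools incorporate experiments in richer ways, for example as priors on channel ROI or as an additional selection objective alongside goodness of fit.

```python
def calibration_error(implied, measured):
    """Mean absolute percentage error between a model's implied incremental
    conversions and experiment-measured incremental conversions, per tested channel."""
    errors = [abs(implied[ch] - lift) / lift for ch, lift in measured.items()]
    return sum(errors) / len(errors)


def select_calibrated_model(candidates, measured):
    """Return the candidate whose implied lift best matches the experiments, plus all scores.

    candidates: model name -> {channel: implied incremental conversions in the test window}
    measured:   channel -> incremental conversions measured by an experiment
    """
    scores = {name: calibration_error(implied, measured) for name, implied in candidates.items()}
    best = min(scores, key=scores.get)
    return best, scores


# Hypothetical candidate fits and experiment readouts for the same test window
candidates = {
    "model_a": {"search": 900, "social": 300},
    "model_b": {"search": 600, "social": 450},
}
measured = {"search": 550, "social": 500}
best, scores = select_calibrated_model(candidates, measured)
```

Here `model_b` is preferred (a calibration error of about 10% versus about 52%), even if both models happened to fit historical sales equally well.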

Attribution, on the other hand, is being directly affected by the slow, impending death of the third-party cookie. Adtech’s ongoing obsession with replacing it has led to advertiser-side solutions such as server-side tagging, email ID matching and universal IDs, where the end goal is to recover or even increase the total volume of observable conversions and users in cookie-sparse environments. While these solutions will not improve incrementality on their own, they can expand the pool of user IDs available for experiments (thus reducing their cost) and enrich real-time bidding with more durable and/or valuable data.

Finally, while MMMs and attribution focus on quantitative measures, marketers shouldn’t overlook the value of other insight sources. Metrics such as share of voice and user sentiment offer longitudinal views of brand health and interest, complementing the more direct measures of incrementality. These indicators can inform messaging strategies or even provide additional signals to improve MMMs, though they cannot independently describe the causal impact of specific marketing activities.

The modern measurement toolkit

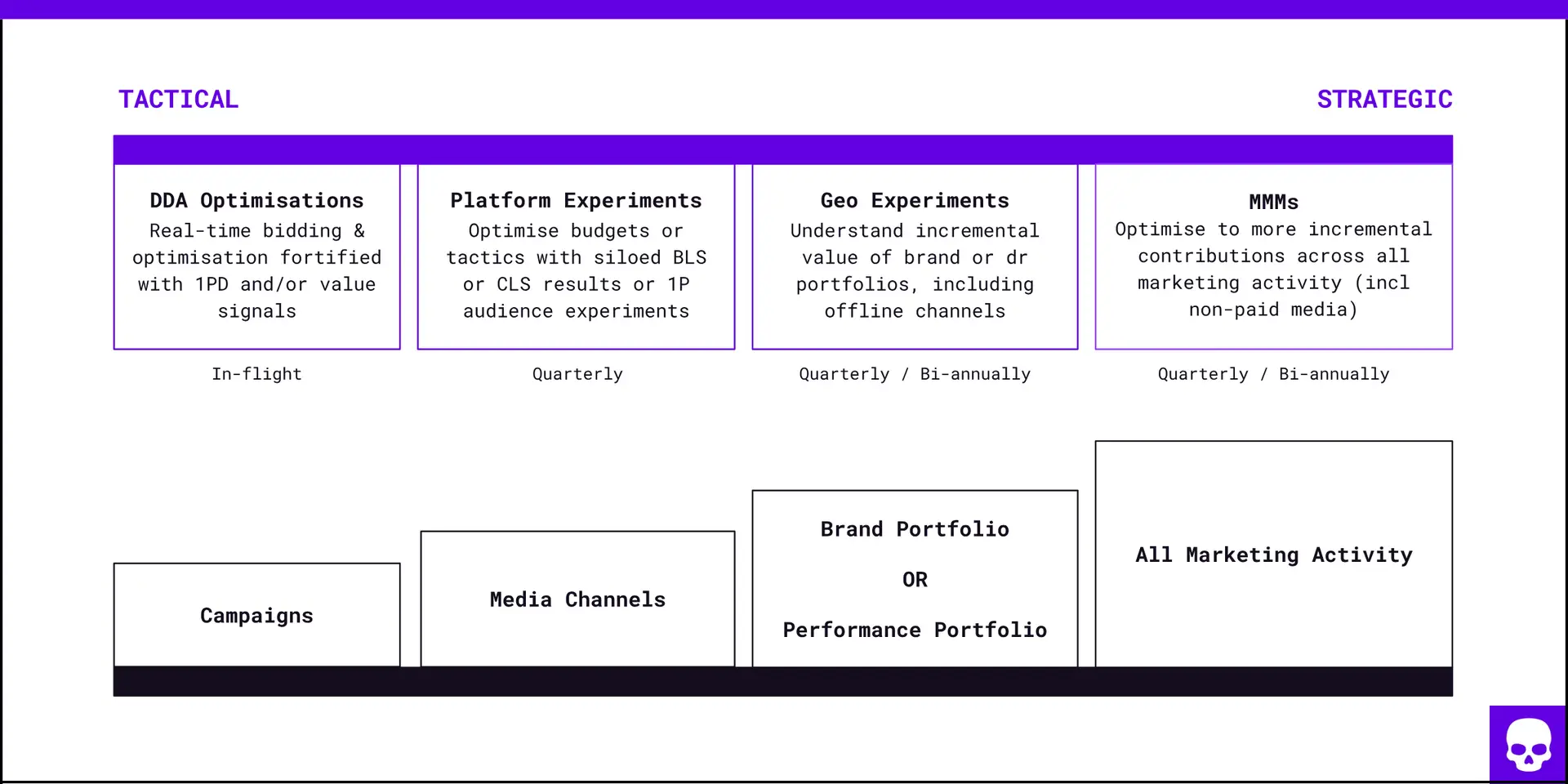

For the marketer to achieve comprehensive channel coverage and actionable insights at both strategic and tactical levels, a balanced measurement framework that leverages multiple methods is required (see Figure B).

Figure B – Marketing continuum measurement framework

The above is an example of how each measurement method plays a part in improving incrementality and coverage across an increasingly complex scope of marketing activities and decisions. We further break them down below.

Strategic goals

- Total sales lift of all performance or brand activity

- Total sales lift of all performance and brand activity

MMMs are most suitable here for advertisers with substantial spends across a diverse set of paid and non-paid channels. They allow you to forecast marginal gains, simulate budget scenarios based on incremental contributions across activities, and understand external effects on the business (e.g. macroeconomics, seasonality, competitor activity). Geo experiments can also complement MMMs by measuring aggregate activities across multiple channels.
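The forecasts behind such budget scenarios typically rest on two transformations: an adstock function that carries part of each period's effect into later periods, and a saturation curve that captures diminishing returns. The sketch below uses a geometric adstock and a Hill curve, two common choices, plus a simple grid search over a two-channel budget split. All channel parameters are hypothetical, not fitted values; a real MMM estimates them from data, ideally calibrated with experiments. Adstock is shown for completeness, while the budget scenario compares steady-state spend per period.

```python
def geometric_adstock(spend, decay):
    """Carry a share `decay` (0 to 1) of each period's accumulated effect into the next period."""
    carried, out = 0.0, []
    for s in spend:
        carried = s + decay * carried
        out.append(carried)
    return out


def hill(x, half_sat, shape):
    """Hill saturation curve: share of the maximum response reached at spend x.

    Equals 0.5 when x == half_sat; shape > 1 gives an S-curve, shape == 1 a concave curve.
    """
    return x ** shape / (x ** shape + half_sat ** shape)


# Hypothetical (not fitted) parameters per channel:
# (maximum incremental sales, half-saturation spend, shape)
CHANNELS = {
    "tv": (500_000, 200_000, 1.5),
    "search": (300_000, 50_000, 1.0),
}


def incremental_sales(channel, spend):
    """Modelled incremental sales for one period of steady spend (adstock omitted)."""
    ceiling, half_sat, shape = CHANNELS[channel]
    return ceiling * hill(spend, half_sat, shape)


def marginal_return(channel, spend, delta=1_000):
    """Approximate incremental sales per extra unit of spend at the current spend level."""
    return (incremental_sales(channel, spend + delta) - incremental_sales(channel, spend)) / delta


def best_split(total_budget, step=10_000):
    """Grid-search the TV/search split that maximises modelled incremental sales."""
    best = None
    for tv in range(0, total_budget + 1, step):
        search = total_budget - tv
        sales = incremental_sales("tv", tv) + incremental_sales("search", search)
        if best is None or sales > best[2]:
            best = (tv, search, sales)
    return best
```

With these made-up curves, `best_split(300_000)` lands at roughly $220,000 on TV and $80,000 on search, beating either all-TV or all-search: search saturates quickly, while TV's S-shaped curve rewards scale only up to a point.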

Tactical goals

- Brand or conversions lift of individual channels

- Brand or conversions lift of individual tactics

- Conversion lift for existing prospects and customers

Media campaign managers typically use in-platform experiments (e.g. YouTube brand lift studies) or first-party data audiences to validate and optimise channel-level activity. While these experiments don’t account for other channels’ contributions, they’re useful for optimising tactics like targeting and creatives.

Persistent testing across quarters can stabilise understanding of incrementality levels. When combined with strategic-level measurement, these insights can inform cross-channel budget reallocations.
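One way to turn a series of quarterly tests into a more stable estimate is inverse-variance weighting, as in a fixed-effect meta-analysis: more precise tests get more weight, and the pooled standard error shrinks as tests accumulate. This sketch assumes the channel's true incrementality stays roughly constant across quarters; if it genuinely shifts (for instance with seasonality or creative changes), a random-effects model would be more appropriate. The function name and inputs are hypothetical.

```python
from math import sqrt


def pool_lift_estimates(estimates):
    """Fixed-effect (inverse-variance) pooling of repeated lift estimates.

    estimates: list of (lift, standard_error) pairs, e.g. one per quarterly test.
    Returns (pooled_lift, pooled_standard_error).
    Assumes the true lift is the same in every test.
    """
    weights = [1 / se ** 2 for _, se in estimates]
    total = sum(weights)
    pooled = sum(w * lift for w, (lift, _) in zip(weights, estimates)) / total
    return pooled, sqrt(1 / total)


# Hypothetical quarterly readouts: (relative lift, standard error)
quarterly = [(0.12, 0.05), (0.08, 0.04), (0.10, 0.03)]
pooled_lift, pooled_se = pool_lift_estimates(quarterly)
```

These three made-up tests pool to a lift of roughly 9.8% with a standard error of about 2.2 points, tighter than any single quarter's estimate.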

In-flight goals

- Bid towards high-value impression levers or customer paths that predict brand or conversion lift incrementality

- Test different levers or bidding methods (e.g. LTV vs CPA) using the experiments listed above

Identifying truly incremental signals at the impression level is akin to finding needles in a haystack. While there are now more studies on the causal effects of ad attention on brand lift, performance campaigns are far noisier, given the greater distance between an ad impression and the point of conversion. That said, some data-driven attribution solutions (e.g. Google’s) claim to identify incremental conversion paths and automate bidding decisions.

Incrementality experiments as ground truth

A marketer can make precisely inaccurate decisions, and relying only on last-click attribution (or on any single type of measurement) in a multi-channel world all but guarantees it. Precision needs the context of ground truth to become accuracy, and incrementality experiments provide that ground truth.

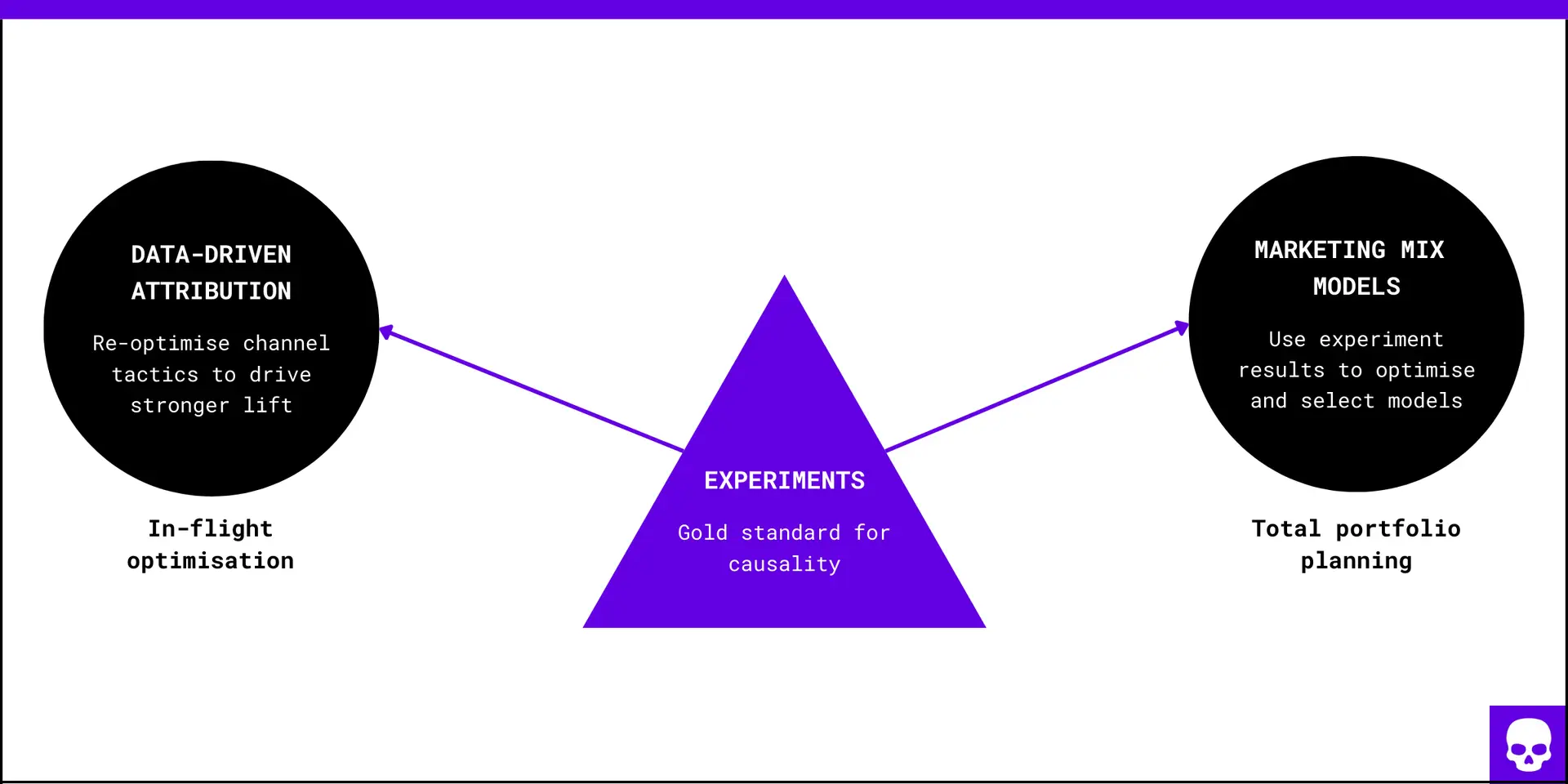

When experiments cover a high percentage of media spend, they start to become a reliable source for validating and/or calibrating other measurement methods (see Figure C).

Figure C – Incrementality experiments for calibration

Calibration extends the domain of incrementality in several ways:

- Enhancing MMM accuracy by integrating experiment results – Modern MMMs, such as Meta’s Robyn and Google’s Meridian, allow analysts to integrate data from incrementality experiments, or from lower-fidelity sources such as industry benchmarks, to improve the accuracy of their outputs.

- Optimising campaign tactics that show lower incrementality – While creative A/B testing is the most common use of experiments, marketers can optimise other levers (from targeting to value-based signals) to improve a channel’s overall incrementality.

- Applying incrementality multipliers to tCPA and tROAS bids – Campaign managers can use incrementality multipliers to set relative incremental Cost Per Acquisition (iCPA) or incremental Return on Ad Spend (iROAS) bids across channels. However, this approach can often lead to aggressive targets that “subtract” organic conversions from their calculations. Caution is needed to avoid amplifying the flawed hierarchy of last-click attribution and/or severely destabilising spend and conversion volumes.
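The arithmetic behind incrementality multipliers is simple, which is partly why it is easy to misapply. The sketch below assumes a single experiment-derived factor per channel (incremental conversions divided by platform-attributed conversions over the same period) and that incremental and attributed conversions carry the same average value; both are simplifying assumptions, and the function names are hypothetical.

```python
def incrementality_factor(incremental, attributed):
    """Share of platform-attributed conversions that an experiment found to be incremental."""
    return incremental / attributed


def tcpa_for_target_icpa(target_icpa, factor):
    """iCPA = attributed CPA / factor, so the platform tCPA is target iCPA * factor."""
    return target_icpa * factor


def troas_for_target_iroas(target_iroas, factor):
    """iROAS = attributed ROAS * factor, so the platform tROAS is target iROAS / factor.

    Assumes incremental and attributed conversions have the same average value.
    """
    return target_iroas / factor


# Hypothetical experiment: 400 incremental conversions vs 1,000 attributed by the platform
factor = incrementality_factor(incremental=400, attributed=1_000)
tcpa = tcpa_for_target_icpa(100.0, factor)
troas = troas_for_target_iroas(2.0, factor)
```

With a factor of 0.4, hitting a $100 iCPA means setting a $40 tCPA, and a 2.0 iROAS means a 5.0 tROAS. Targets this much tighter than the platform's attributed view are where the spend and volume instability noted above tends to appear.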

While the above calibration types can each be beneficial, there’s another that may drive a marketer to madness: the pursuit of a single source of truth that’s free of contradiction and discrepancy. As we’ve learned, each measurement method draws data from different granularities and activity scopes, using unique counting and/or modelling approaches. This results in natural discrepancies that are not worth the effort to bridge.

Instead of chasing perfect alignment, marketers should recognise the relative strengths and weaknesses of each method, and apply them according to the scope of activity and decision-making need. This approach allows marketers to make full use of their measurement toolkits, balancing granular experimental data with broader analytical perspectives. Doing so allows for comprehensive coverage of marketing activities and actionable, incrementality-grounded insights.