The modern marketer’s measurement toolkit has arrived.

The end goals themselves remain unchanged: effective measurement should improve the quality of decision-making at the strategic and tactical levels. Yet, in the face of signal loss from cookie deprecation and a rising demand for statistical rigour, marketers are under pressure to maintain optimisation agility and prove (rather than merely claim) the impact of marketing to senior leadership.

In the past year, the resurgence of marketing mix modelling, increased emphasis on incrementality, and new-found resilience of data-driven attribution (DDA) have transformed the measurement landscape. Coupled with the accessibility of machine learning and first-party data integration, they collectively present opportunities for organisations to rise to these new challenges, and finally show last-click attribution out the door.

The opportunities include, but are not limited to:

- Fortifying measurement with first-party identifiers. This increases the volume of observable data available for both attribution and user-based incrementality experiments in the face of signal loss from third-party cookie deprecation.

- Establishing causality to demonstrate business impact. This allows marketers to rise above correlation, identify actual lift from campaigns and tactics, and pinpoint results that would not have occurred otherwise.

- Holistically evaluating marketing activity. This takes into account paid, owned and earned channels as well as other factors (seasonality, product and price updates, etc.) to create a comprehensive view of marketing’s contribution to sales.

However, no single measurement method can address all three of these use cases on its own. The everything-everywhere-all-at-once single source of truth is but a marketer’s pipe dream.

Instead, advanced marketers will become adept at situationally applying the right method to improve decision making at varying levels. At the same time, marketers with smaller budgets (<$5M / year) and fewer marketing channels will find a combination of DDA and incrementality experiments sufficient to upgrade their measurement approach.

All in all, the three methods come together to provide holistic coverage of marketing activity, as well as insights that inform strategic (portfolio budget planning) and tactical (channel and tactic optimisation) action. Companies that are able to implement strong marketing measurement programmes are 44% more likely to beat revenue goals.

Last-click attribution, like last hits in Dota, ignores all other contributing efforts to a conversion

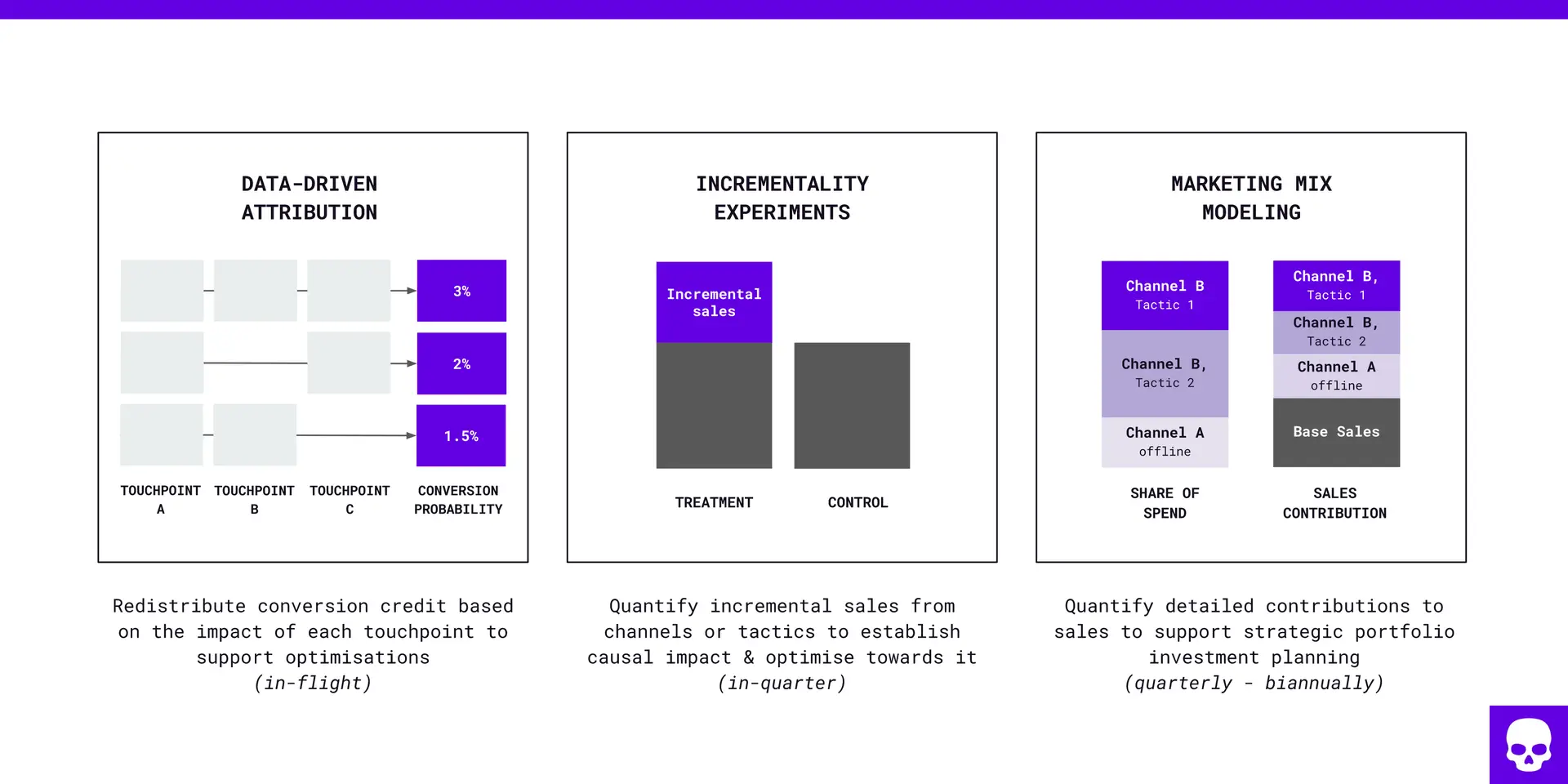

In this article, we’ll examine each measurement method – diving into what they are, how to enable them, and how they create synergy when deployed in the right contexts. Let’s start with an illustration of each method (Figure A) and an overview of how they work.

Figure A – Measurement methodology overview

Data-Driven Attribution (DDA)

What is it

- Uses machine learning to analyse and redistribute conversions. Statistical models analyse granular parameters (device, ad formats, keywords, etc.), and removal effects (how much conversions drop when a touchpoint is taken out of the path) are estimated by comparing converting and non-converting paths.

- An evolution of multi-touch attribution models, which apply arbitrary rule-based distribution (first-touch, last-touch, equal-weighted, time decay, etc.) to conversions based on time or the position of events on the path to conversion.
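To make the contrast between rule-based and data-driven credit concrete, here is a minimal Python sketch. It assumes a handful of hypothetical customer journeys and uses a deliberately simplified removal effect: the share of conversions that would be lost if every path through a channel were dropped. Production DDA models are considerably more elaborate and also learn from non-converting paths; the function names and data below are illustrative assumptions, not any vendor's implementation.

```python
from collections import defaultdict

# Hypothetical journeys: (ordered channel touchpoints, did the user convert?)
paths = [
    (["search", "social", "email"], True),
    (["social", "email"], True),
    (["search"], False),
    (["display", "search"], True),
    (["display"], False),
]


def last_click(paths):
    """Rule-based model: the final touchpoint receives all the credit."""
    credit = defaultdict(float)
    for touches, converted in paths:
        if converted:
            credit[touches[-1]] += 1.0
    return dict(credit)


def removal_effect_credit(paths):
    """Simplified removal effect: credit is proportional to the share of
    conversions lost when all paths containing a channel are removed."""
    total = sum(1 for _, converted in paths if converted)
    channels = {ch for touches, _ in paths for ch in touches}
    effect = {}
    for ch in channels:
        remaining = sum(
            1 for touches, converted in paths if converted and ch not in touches
        )
        effect[ch] = (total - remaining) / total
    # Rescale so the credited conversions add up to the observed total.
    norm = sum(effect.values())
    return {ch: total * e / norm for ch, e in effect.items()}


print("Last click:", last_click(paths))
print("Removal effect:", removal_effect_credit(paths))
```

In this toy data set, last click hands all credit to email and search, while the removal-effect view also credits social and display for the journeys they appeared in.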

Ways to enable

- DDA is accessible via analytics tools such as Google Analytics and Adobe Analytics, or even natively within media buying platforms like Google Ads.

- Automated models are built using data ingested from marketing channels and customer touchpoints.

- Marketers can increase model resilience to cookie deprecation by sharing durable identifiers (e.g. hashed emails) from customer touchpoints to increase the total observable data for optimisation.
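As an illustration of what "hashed emails" can look like in practice, the sketch below normalises an address and hashes it with SHA-256 using Python's standard library. The normalisation steps (trim whitespace, lowercase) and the function name `hash_email` are assumptions made for this example; each platform publishes its own formatting requirements, which should be checked before sharing any data.

```python
import hashlib


def hash_email(raw_email: str) -> str:
    """Return a SHA-256 hex digest of a normalised email address.

    Normalisation here is an assumption: strip surrounding whitespace
    and lowercase, so the same customer always yields the same hash.
    """
    normalised = raw_email.strip().lower()
    return hashlib.sha256(normalised.encode("utf-8")).hexdigest()


# The same address entered with different casing or spacing matches.
print(hash_email("  Jane.Doe@Example.com ") == hash_email("jane.doe@example.com"))
```

Normalising before hashing matters because a hash changes completely when a single character differs, which would otherwise reduce match rates.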

Use it to

- Understand which channels and touchpoints delivered the highest impact on the business. Compare DDA with your existing attribution model (e.g. last click) to identify optimisation opportunities.

- Optimise towards DDA-modelled conversions manually or using real-time bidding (available via GA4 + Google Ads). DDA provides the highest optimisation agility relative to incrementality experiments and marketing mix models (MMMs).

Incrementality Experiments

What is it

- Randomised controlled trials (RCTs) are used to rigorously measure the causality of marketing investments.

- Uses treatment and control groups created at the user and/or geographical level to facilitate the experiment.
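A minimal sketch of how lift might be read from a user-level experiment follows. It assumes users were randomly split into treatment (exposed) and control (held out) groups, and uses a standard two-proportion z-test to check significance. All figures are hypothetical, and `lift_summary` is an illustrative name rather than a platform API.

```python
import math


def lift_summary(treat_users, treat_conv, ctrl_users, ctrl_conv):
    """Summarise incremental lift from a randomised treatment/control split."""
    rate_t = treat_conv / treat_users
    rate_c = ctrl_conv / ctrl_users
    abs_lift = rate_t - rate_c

    # Two-proportion z-test under the null hypothesis of no difference.
    pooled = (treat_conv + ctrl_conv) / (treat_users + ctrl_users)
    se = math.sqrt(pooled * (1 - pooled) * (1 / treat_users + 1 / ctrl_users))
    z = abs_lift / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided

    return {
        "relative_lift": abs_lift / rate_c,
        # Conversions that would not have happened without the campaign.
        "incremental_conversions": abs_lift * treat_users,
        "z_score": z,
        "p_value": p_value,
    }


# Hypothetical experiment: 100,000 users per group.
result = lift_summary(
    treat_users=100_000, treat_conv=1_200, ctrl_users=100_000, ctrl_conv=1_000
)
print(result)
```

The control group's conversion rate stands in for the counterfactual, which is why randomisation matters: it keeps the two groups comparable apart from the campaign exposure.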

Ways to enable

- Brand and conversion lift studies identify the incremental lift driven by individual channels. The former measures awareness or intent and the latter lower funnel outcomes. Many media platforms provide these measurement solutions natively.

- Geo experiments such as matched market testing can be used to further uncover cross-channel incrementality (e.g. how switching off a channel in one city impacts incremental sales when compared to a similar control city).

- First-party audiences can be used to create treatment/control groups from known customers. This method mainly supports hypotheses relating to nurture and retention campaigns. The audience segments can be consistently applied across channels for cross-channel findings.
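The matched market idea can be sketched with a simple difference-in-differences calculation. The example assumes two comparable cities with hypothetical weekly sales, and that the control city's trend is a fair stand-in for what the test city would have done without the change. Real geo experiments typically use many markets and more robust models; this is only a back-of-the-envelope version.

```python
def geo_incremental_sales(test_pre, test_post, control_pre, control_post):
    """Estimate incremental sales in a test market using the control
    market's trend as the counterfactual (difference-in-differences)."""
    control_trend = control_post / control_pre
    counterfactual = test_pre * control_trend  # expected sales with no change
    incremental = test_post - counterfactual
    return {
        "counterfactual": counterfactual,
        "incremental": incremental,
        "relative_lift": incremental / counterfactual,
    }


# Hypothetical sales before and during the test period.
result = geo_incremental_sales(
    test_pre=1_000, test_post=1_150, control_pre=800, control_post=840
)
print(result)
```

When a channel is switched off rather than on, the same calculation applies and the incremental figure is expected to come out negative.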

Use it to

- Understand counterfactual gains from media campaigns (i.e. what business impact would be lost if media campaigns did not run?).

- Reallocate campaign budgets according to the relative size and frequency of the lift observed across channels. Adjust campaign tactics and levers to deliver higher lift.

- Stabilise learnings over a minimum of two consecutive quarters of repeated tests before redirecting budgets or channels towards new hypotheses.

Marketing Mix Modelling (MMM)

What is it

- Analytical techniques used to identify marketing’s contribution to sales, using statistical models that analyse online and offline channels over longer time periods than DDA or experiments.

- Modern versions employ advanced models that can analyse granular data and external factors more flexibly, reducing analyst bias without relying on personal identifiers.

Ways to enable

- In-house: Requires time and resource investment to handle data preparation, modelling and analysis. Open-source models such as Meta’s Robyn or Google’s Meridian provide analysts with easier access to high-quality models.

- Measurement software: Companies like Rockerbox or Lifesight provide end-to-end solutions. Many offer both proprietary and open-source models, media channel APIs for faster data preparation, and streamlined dashboards. Pricing models can be either subscription or project-based.

- Scope and complexity can vary widely: Projects can take anywhere from two to six months to complete, and can be further calibrated with experiment data to reflect causal effects. Data & Analytics service providers such as Skeleton Key offer flexible partnership options to guide you through the process.

Use it to

- Identify the contribution of marketing channels across different campaign types (offline, online, paid, owned, earned, promotions).

- Evaluate how various factors can influence sales outcomes and thus investment decisions. These can include but aren’t limited to geographical disparities, product segments, seasonality, and brand campaign halo effects.

- Strategically allocate budget across performance and brand portfolios, channels, and/or tactics. This integrated view considers investment saturation and budget scenarios to guide forecasting and optimisation decisions. For most organisations, this process occurs biannually, though some large advertisers may operate on a quarterly cadence.
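To show how investment saturation feeds into budget scenarios, here is a small sketch that compares two allocations using a saturating (Hill-type) response curve, a shape commonly used in MMMs to represent diminishing returns. The channel parameters and spend figures are hypothetical; in a real project they would come from a fitted model rather than being set by hand.

```python
def hill_response(spend, max_sales, half_saturation, shape=1.0):
    """Sales driven by a channel at a given spend. The curve flattens as
    spend grows; half of max_sales is reached at half_saturation."""
    if spend <= 0:
        return 0.0
    return max_sales * spend**shape / (spend**shape + half_saturation**shape)


# Hypothetical fitted curve parameters per channel.
channels = {
    "search": {"max_sales": 500_000, "half_saturation": 100_000},
    "tv": {"max_sales": 900_000, "half_saturation": 400_000},
}


def scenario_sales(allocation):
    """Total modelled sales for a {channel: spend} allocation."""
    return sum(
        hill_response(spend, **channels[name]) for name, spend in allocation.items()
    )


# Two ways of spending the same total budget of 400,000.
scenarios = {
    "current": {"search": 300_000, "tv": 100_000},
    "rebalanced": {"search": 150_000, "tv": 250_000},
}

for name, allocation in scenarios.items():
    print(name, round(scenario_sales(allocation)))
```

With these assumed curves, search is already well into diminishing returns at the current spend, so shifting part of its budget to TV yields higher modelled sales for the same total outlay.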

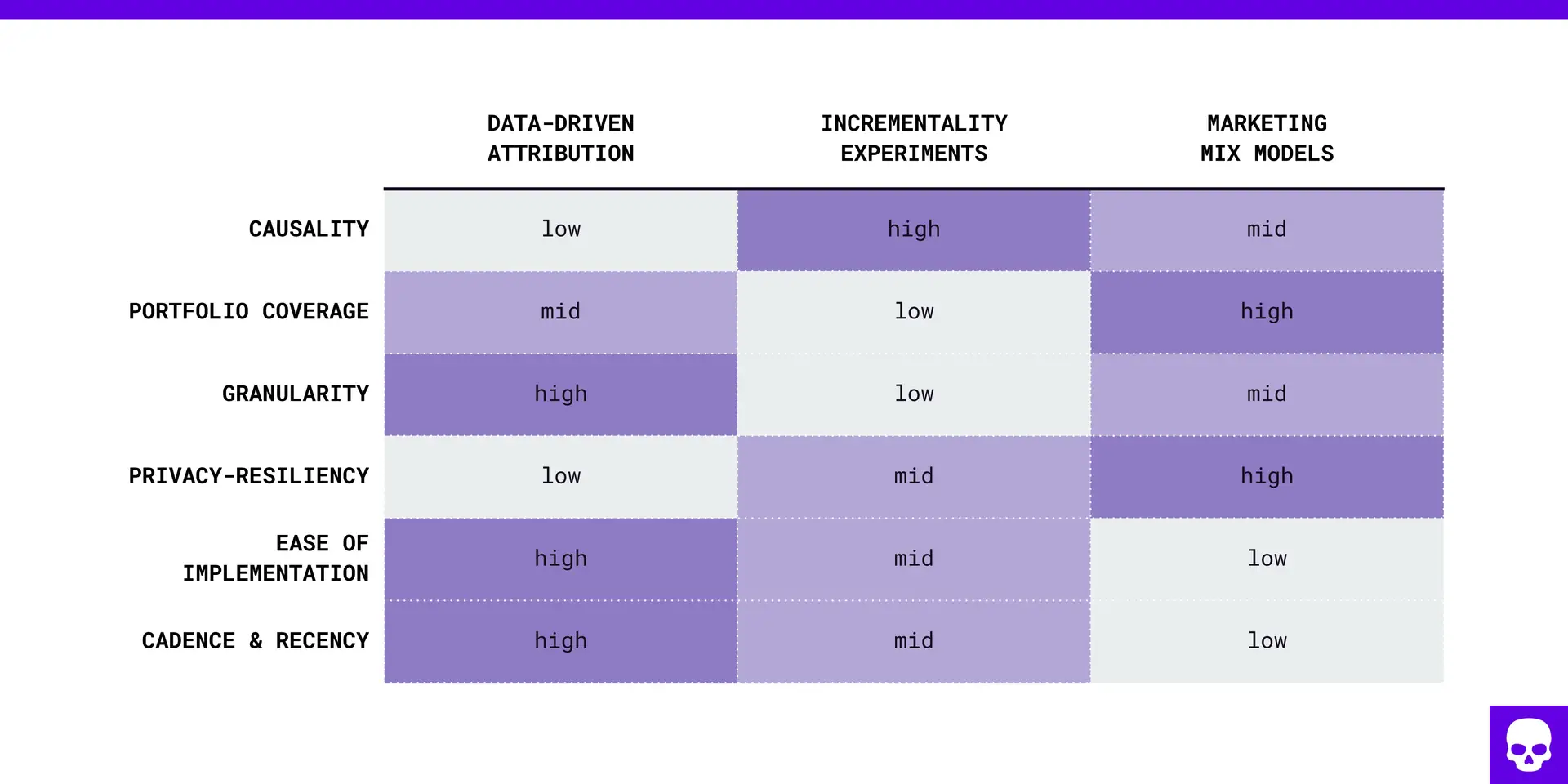

Each method is a critical puzzle piece

Each method presents its own strengths and weaknesses. That said, they are able to complement one another and fill gaps in terms of data granularity, channel coverage and optimisation requirements in the current privacy-first climate. We examine them here (Figure B):

Figure B – Measurement methodology strength/weakness comparison matrix

Incrementality testing is built on randomised controlled trials, which are considered the gold standard for establishing causality across many scientific disciplines. However, it comes with certain limitations. Experiments are conducted with a fixed campaign duration and therefore provide point-in-time estimates, which presents tradeoffs in speed and continuity. Detecting significant effects also requires sufficient scale, limiting the number of experiments that can be conducted each quarter. As a result, marketers may often find themselves trying to stabilise higher-level learnings (e.g. which channels drive incrementality) over multiple quarters. Consequently, investigations into more granular optimisation levers (e.g. creatives, targeting, bidding strategies) may take a temporary backseat to broader insights.

Data-driven attribution (DDA) offers a different approach, providing continuous and granular feedback from fast-moving campaign data. This makes it suitable for in-flight optimisation. However, it is important to note that DDA doesn’t establish deterministic causality. Instead, it uses models that apply removal effects to identify higher-impact paths or channels, inferring causal relationships. Advanced marketers are taking DDA a step further by increasing observability with first-party data. Moreover, they’re testing DDA and smart bidding against more meaningful goals that generate business value (e.g. sales, LTV, retention), moving beyond surface-level vanity metrics.

Marketing Mix Models (MMMs) become crucial for marketers managing a larger number of paid, owned, and earned channels with scaled spends often exceeding ~$10 million annually. These models provide a holistic view of sales contribution, allowing marketers to validate and optimise their investments over extended time horizons. While MMMs don’t require user-level data, the data preparation process can be time-consuming, especially if it exhaustively encompasses a large variety of channels and tactics. Finally, while modern MMMs provide ways to reduce analyst bias, they ultimately project a range of probabilities, and therefore require careful selection of input parameters and output models that reflect business realities. This challenge is more prevalent in MMMs than in other methods, necessitating strong alignment between analysts and stakeholders in order to deliver comprehensive, unbiased and practical results.

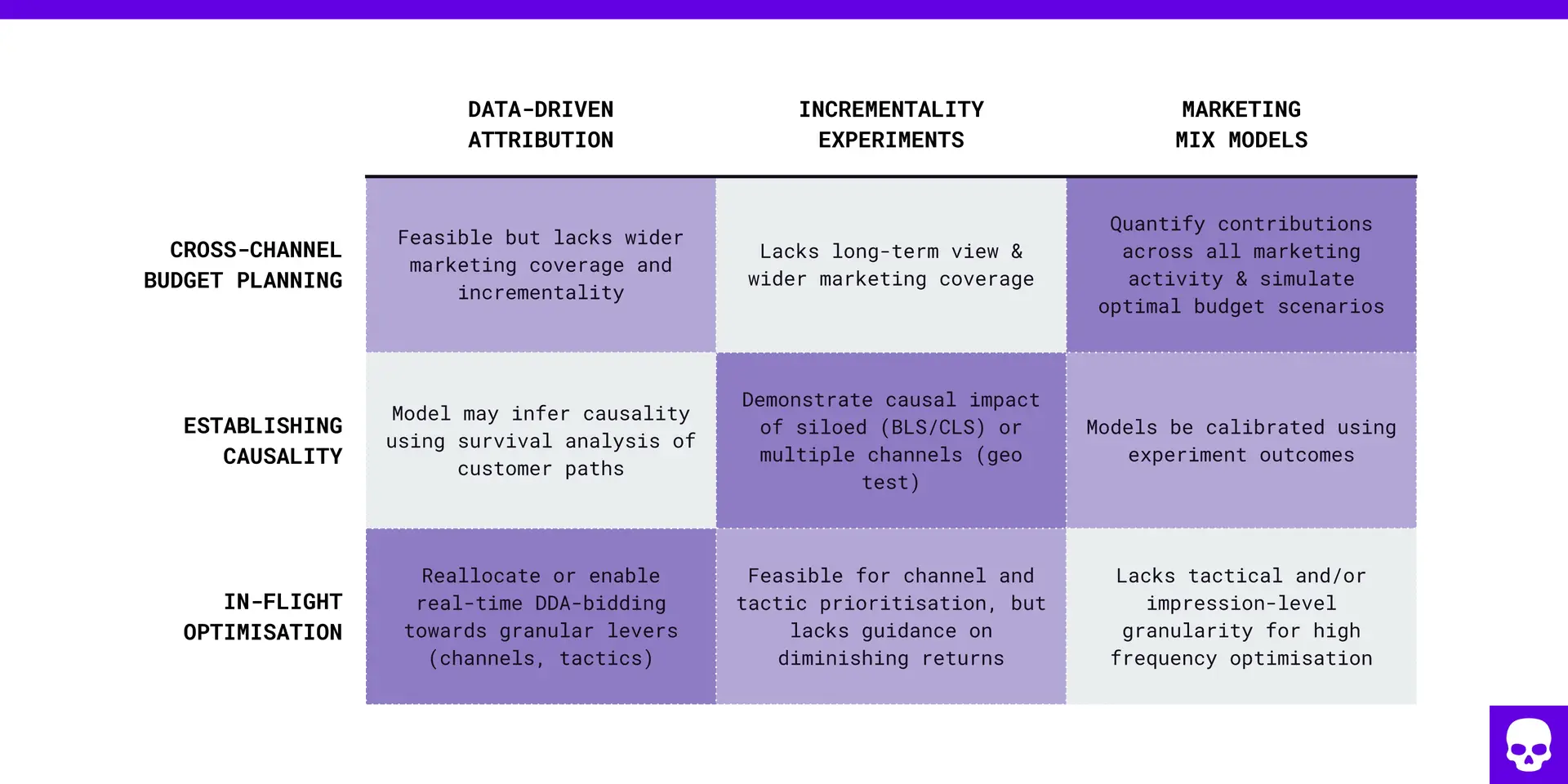

New Standards: A balanced framework for strategic and tactical decision making

Just as a GPS receiver pinpoints its position by combining signals from several satellites, marketers can combine MMMs, incrementality and attribution to generate holistic insights and guide decision-making at three different levels: in-flight optimisation, establishing causality and portfolio budget planning. Figure C below illustrates how they compare with one another:

Figure C – Measurement methodology decisioning matrix

Mature marketers employ at least two of these three methods, striking an optimal balance between statistical rigour, sophistication and actionability. To get started, marketers should familiarise themselves with current deployment methods, understand the data and measurement scopes of each approach, and recognise the relative strengths and limitations of each methodology. Doing so allows them to:

- Create a framework aligning appropriate methods with specific decision-making needs.

- Fortify selected methods by plugging gaps in tracking, channel coverage and processes.

- Unify resources and capabilities, motivate stakeholders, and meet aligned expectations.

Incrementality as ground truth for triangulation and calibration

While “triangulation” uses multiple measurement methods to comprehensively answer different questions at strategic and tactical levels, “calibration” goes a step further by tuning measurement models to increase precision. Although relatively new, both approaches are gaining momentum. Google’s latest playbook recommends a unified approach that cross-calibrates the three measurement methods, while Meta encourages calibration of its open-source MMM, Robyn, with experiment data.
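One simple way to picture calibration is to treat an experiment's measured lift as ground truth and prefer the MMM candidate whose estimate for the same channel and period sits closest to it. The sketch below does exactly that with hypothetical numbers. It is an illustration of the idea only and is not how any specific tool implements calibration.

```python
def calibration_error(model_estimate, experiment_lift):
    """Absolute percentage gap between a model's estimated incremental
    sales and the lift measured by an experiment for the same channel."""
    return abs(model_estimate - experiment_lift) / abs(experiment_lift)


# Hypothetical incremental sales measured by a geo experiment.
experiment_lift = 120_000

# Hypothetical estimates for the same channel from three candidate MMMs.
candidates = {"model_a": 180_000, "model_b": 126_000, "model_c": 90_000}

# Rank candidates from best to worst agreement with the experiment.
ranked = sorted(
    candidates, key=lambda name: calibration_error(candidates[name], experiment_lift)
)

for name in ranked:
    error = calibration_error(candidates[name], experiment_lift)
    print(f"{name}: {error:.0%} away from the experiment")
```

In practice, agreement with experiments would be weighed alongside statistical fit and business plausibility rather than used as the sole selection criterion.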

Using triangulation and calibration, marketers are able to eliminate weaknesses of individual measurement methods and create synergistic loops of evidence, experimentation and optimisation. In our next article, we’ll discuss the creative possibilities and pitfalls of these approaches, as well as the importance of causality as the north star for making decisions of maximum rigour and quality.