The marketing measurement landscape is undergoing rapid transformation, driven by demands for stronger customer privacy and greater evidence-based rigour.

Attribution, Incrementality and Marketing Mix Modeling are evolving swiftly to meet these new realities, changing how marketers holistically and objectively evaluate the business impact of marketing.

In this guide, we’ll explore the key evolutions in these methodologies and how an incrementality-led approach can unify them within a framework that empowers marketers to make higher quality strategic and tactical decisions. By the end, we’re confident that you’ll know more about core concepts of modern marketing measurement than most of your peers.

To skip directly to specific sections, please use the following links:

- North star KPIs deserve north star measurement

- Last-click marketers must evolve

- Raising the bar with modern measurement methods

- Measurement methods explained

- Fortifying attribution for the privacy-first era

- Harnessing the MMM resurgence

- Proving marketing impact with incrementality

- The incrementality-led measurement framework

Estimated reading time: 23 minutes

Ready? Great, let’s dive in.

North star KPIs deserve north star measurement

Companies align everything from product development to sales strategies to key goals such as revenue, sales margins, daily active users (DAUs) and more. For marketing teams, this means balancing both long-term brand building and short-term activations to ensure both demand generation and capture.

The complexity of this task can be immense. Marketing activities can span a vast range of online and offline channels, owned and earned media, influencer partnerships, and more. Success metrics are equally diverse, encompassing awareness, reach, frequency, attention, engagement, and ultimately, conversion.

In this dynamic landscape, being able to holistically assess and prove marketing’s impact on the bottom line is crucial. Not only to defend existing marketing budgets, but also to make the case for increased investment.

Those who can clearly connect marketing activities to business outcomes help CFOs recognise marketing’s strategic value, elevating it from an operational expense to a growth driver.

And the data backs this up, as companies with strong measurement programs are 44% more likely to exceed revenue targets.

Last-click marketers must evolve

Despite its inherent limitations, last-click attribution has become the default model for measuring digital marketing success – a practice that is unfortunately popular among many of the world’s largest brands.

Third-party cookies, once a cornerstone of digital advertising, played a significant role in fueling this over-reliance. Their widespread use made attribution easily accessible, and last-click attribution, with its simplicity, quickly became the default choice.

However, the model’s inherent bias towards the final interaction prior to conversion means that marketers effectively disregard all other touchpoints in the customer journey. Conversion credits are also disproportionately allocated to demand capture at the expense of demand generation activities. This can lead to suboptimal investments, focusing on users already near conversion instead of nurturing potential customers.

That said, the viability of attribution-based measurement is being challenged by an ever-growing loss of data signals. The shift towards privacy, accelerated by events like Cambridge Analytica and legislation such as GDPR, has two main drivers:

Increased user consent requirements for personal data

- Privacy regulations like GDPR and CCPA mandate informed consent for the collection and use of personal data, with growing global adoption beyond the EU and California.

- Operating systems such as iOS require explicit user permission for cross-app tracking via device identifiers (IDFA). Chrome announced plans for a comparable user choice on 3rd party cookies in July 2024.

Deprecation of third party identifiers

- Browsers are (directly and indirectly) phasing out 3rd party cookies and/or reducing the lifespan of first party cookies when third party tracking is present (as seen in Safari).

- App tracking signals (e.g. iOS MAC addresses, background location tracking) are increasingly being blocked.

The impact of these changes is clear: user opt-outs are happening on a massive scale (Figure A). We may not be in a completely cookieless world, but we’re certainly in one that has far fewer of them.

Figure A – Opt-out rates, ad blockers and identifier deprecation

Exceeding 70%, the high rate of users opting out of sharing device IDs (IDFA) on iOS indicates a similarly significant signal loss when Chrome rolls out cookie consent preferences to its users. When this occurs, the viability of last-click, view-through, and multi-touch attribution will further deteriorate.

The implications here are two-fold:

- In order to improve decision-making, marketers need more rigorous ways to distribute conversion credit than last-click counting.

- Third party cookie reliant user-level measurement is degrading, necessitating the exploration of alternative approaches.

Raising the bar with modern measurement methods

The good news is that the measurement landscape has rapidly transformed in the past few years to meet these needs.

There is the resurgence and open-sourcing of econometric mix models, an increased emphasis on incrementality, and a newfound resilience in attribution. The greater availability of compute power is also driving the commodification of advanced measurement models and solutions, making them accessible to marketers everywhere.

Taken together, the modern measurement toolkit presents three opportunities:

Fortifying attribution with durable identifiers – This improves the volume of observable data required for both attribution and user-based incrementality experiments in the face of signal loss from 3rd party cookie deprecation.

Establishing causality to demonstrate business impact – This allows marketers to rise above correlation and identify the causal impact of campaigns and tactics by pinpointing results that would not have occurred otherwise.

Holistically evaluating marketing activity – By capturing paid, owned and earned channels as well as other factors – seasonality, product and price updates, etc. – within marketing mix models, marketers create a comprehensive view of marketing’s contribution to sales.

Measurement methods explained

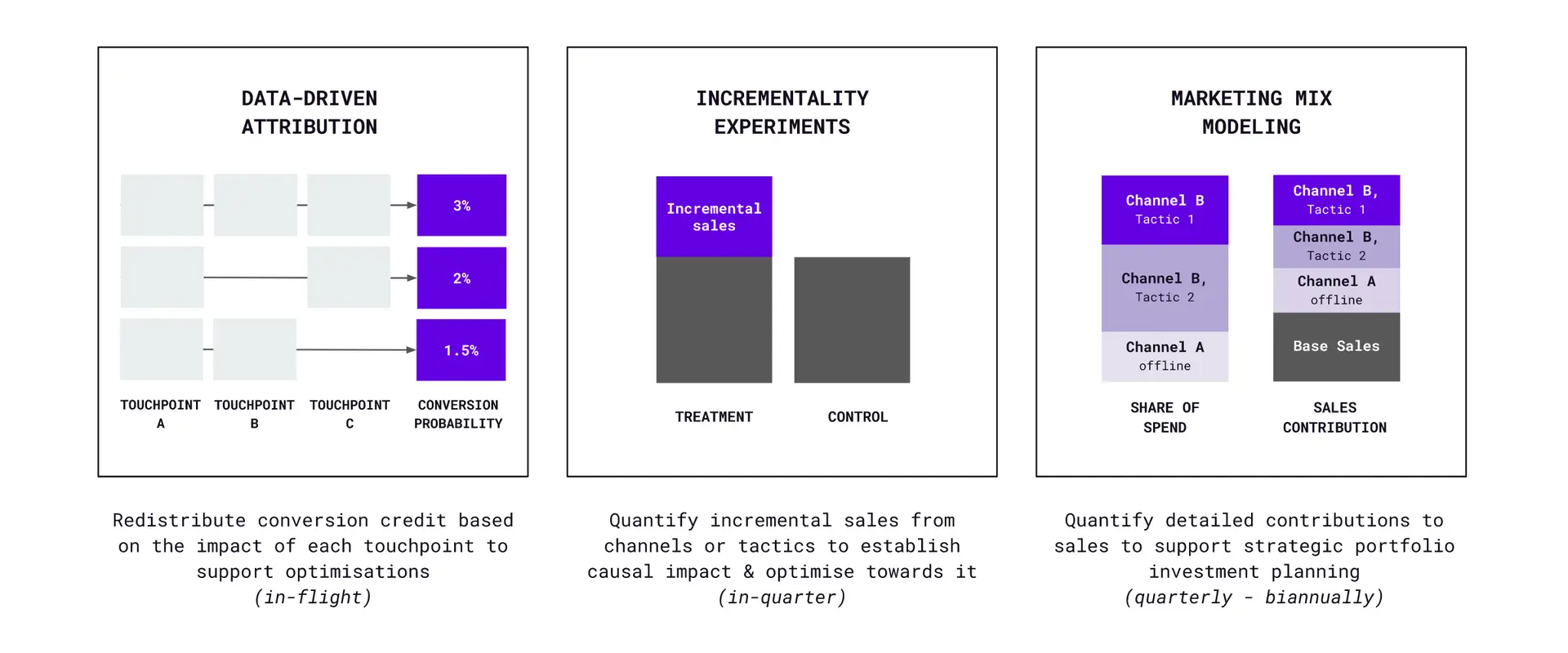

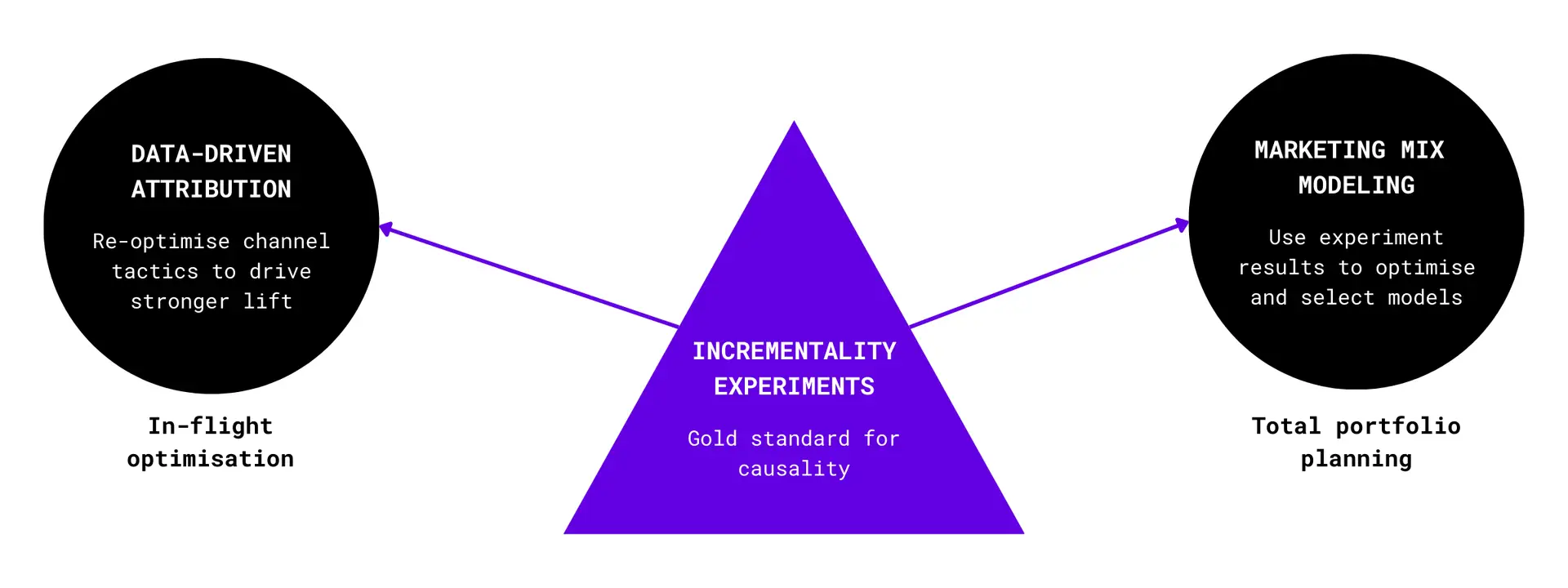

Data-Driven Attribution, Marketing Mix Modeling and Incrementality Experiments make up the modern measurement triad, allowing marketers to seize the aforementioned opportunities.

We explore what they are, how they’re enabled, and in later sections, how they can work together to create synergy when deployed in the right contexts.

Let’s start with a TL;DR illustration of each method (Figure B):

Figure B – Data-driven attribution, incrementality experiments and marketing mix modeling

Data-Driven Attribution (DDA)

What it is

- An attribution method that uses machine learning to analyse and reallocate conversions. More specifically, DDA leverages statistical models to analyse more granular parameters (device, ad formats, keywords, etc.) and removal effects to identify converting vs non-converting paths.

- An evolution of older multi-touch attribution (MTA) models, which apply arbitrary rule-based distribution to conversions based on time or the position of events on the path to conversion (first-touch, last-touch, equal-weighted, time-decay, etc).
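To make the contrast concrete, here is a minimal sketch comparing two rule-based models (last-touch and equal-weighted) with a simplified removal-effect calculation. The journeys are made up, and the removal effect shown is a path-level simplification (a channel's share of converting paths, rescaled), not the algorithm used by any particular analytics product.

```python
from collections import Counter

# Hypothetical journeys: (ordered touchpoints, converted?)
paths = [
    (["display", "search"], True),
    (["social", "search"], True),
    (["display", "email"], True),
    (["display", "social", "search"], True),
    (["search"], False),
    (["social"], False),
]

def last_touch(paths):
    """Rule-based: give each conversion entirely to the final touchpoint."""
    credit = Counter()
    for touches, converted in paths:
        if converted:
            credit[touches[-1]] += 1.0
    return dict(credit)

def equal_weighted(paths):
    """Rule-based: split each conversion evenly across the path."""
    credit = Counter()
    for touches, converted in paths:
        if converted:
            for channel in touches:
                credit[channel] += 1.0 / len(touches)
    return dict(credit)

def removal_effect(paths):
    """Simplified removal effect: the share of conversions that would be lost
    if every path containing the channel stopped converting, rescaled so the
    credits add up to total conversions."""
    total = sum(1 for _, converted in paths if converted)
    channels = {c for touches, _ in paths for c in touches}
    lost = {
        ch: sum(1 for touches, conv in paths if conv and ch in touches) / total
        for ch in channels
    }
    scale = total / sum(lost.values())
    return {ch: share * scale for ch, share in lost.items()}

for name, model in [("last-touch", last_touch),
                    ("equal-weighted", equal_weighted),
                    ("removal effect", removal_effect)]:
    print(name, {ch: round(v, 2) for ch, v in sorted(model(paths).items())})
```

In this toy data, last-touch hands nearly all credit to search, while the other two models surface display and social as contributors earlier in the path.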

Ways to enable

- DDA is accessible via analytics tools such as Google Analytics and Adobe Analytics, or even natively within media buying platforms like Google Ads.

- Automated models are built using data ingested from marketing channels and customer touchpoints.

- Marketers can make models more resilient to cookie deprecation by sharing durable identifiers (e.g. hashed emails) from customer touchpoints to increase the total observable data for optimisation.

Use it to

- Understand which channels and touchpoints delivered the highest impact on the business. Compare DDA with your existing attribution model (e.g. last click) to identify optimisation opportunities.

- Optimise towards DDA-modelled conversions manually or via real-time bidding (available in Google Analytics if you’re a heavy user of the Google ecosystem, or via custom solutions from measurement vendors). DDA provides the highest optimisation agility relative to incrementality experiments and MMMs.

Incrementality Experiments

What it is

- Randomised controlled trials (RCTs) used to rigorously measure the causality of marketing investments.

- Treatment and control groups are created at the user and/or geographical level to facilitate the experiments.
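As a rough illustration of how results from a user-level experiment might be read, the sketch below computes incremental conversions, relative lift and a two-sided p-value from a two-proportion z-test. The group sizes and conversion counts are invented, and the z-test is one common choice rather than the method any specific platform uses.

```python
import math

def conversion_lift(treat_users, treat_conv, ctrl_users, ctrl_conv):
    """Compare conversion rates of a treatment group (saw ads) and a
    control group (ads withheld)."""
    p_t = treat_conv / treat_users
    p_c = ctrl_conv / ctrl_users
    # Conversions in the treatment group that would not have happened otherwise
    incremental = (p_t - p_c) * treat_users
    relative_lift = (p_t - p_c) / p_c
    # Two-proportion z-test with a pooled conversion rate
    pooled = (treat_conv + ctrl_conv) / (treat_users + ctrl_users)
    se = math.sqrt(pooled * (1 - pooled) * (1 / treat_users + 1 / ctrl_users))
    z = (p_t - p_c) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided
    return {
        "incremental_conversions": incremental,
        "relative_lift": relative_lift,
        "z": z,
        "p_value": p_value,
    }

# Hypothetical experiment: 100,000 users per group
result = conversion_lift(100_000, 1_200, 100_000, 1_000)
print(result)
```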

Ways to enable

- Brand and conversion lift studies identify the incremental lift driven by individual channels. The former measures awareness or intent and the latter measures lower funnel outcomes. Many media platforms provide these measurement solutions natively.

- Geo experiments such as matched market testing can be further used to uncover cross-channel incrementality (e.g. how switching off a channel in one city impacts incremental sales when compared to a similar control city).

- First party audiences can be used to create treatment/control groups from known customers. This method mainly tests hypotheses relating to nurture and retention campaigns, where audience segments can be consistently applied across channels for cross-channel findings.
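For the geo experiment case, a simple difference-in-differences calculation is one way to read a matched market test. The sketch below assumes a channel was switched off in a test city while a similar control city carried on as usual; all weekly sales figures are hypothetical.

```python
from statistics import mean

def diff_in_diff(test_pre, test_post, control_pre, control_post):
    """Change in the test market minus change in the control market.
    The control market's change stands in for what would have happened
    in the test market without the intervention."""
    test_change = mean(test_post) - mean(test_pre)
    control_change = mean(control_post) - mean(control_pre)
    return test_change - control_change

# Hypothetical weekly sales before and after the channel was switched off
test_pre, test_post = [500, 510, 490], [430, 425, 435]
control_pre, control_post = [480, 470, 490], [470, 475, 465]

effect = diff_in_diff(test_pre, test_post, control_pre, control_post)
print(f"Estimated weekly sales effect of switching the channel off: {effect}")
```

A negative value suggests the channel was contributing roughly that many weekly sales in the test city. Real geo tests usually need more markets and longer windows than this toy example to separate signal from noise.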

Use it to

- Understand counterfactual gains from media campaigns (i.e. what business impact would be lost if media campaigns were not run?).

- Reallocate campaign budgets according to the relative size and frequency of the lift observed across channels. Adjust campaign tactics and levers to deliver higher lift.

- Stabilise learnings over a minimum of two consecutive quarters of repeated tests before redirecting the same budgets or channels towards new hypotheses.

Marketing Mix Modeling (MMM)

What it is

- Analytical techniques used to identify marketing’s contribution to sales, using statistical models that analyse online and offline channels over longer time periods than DDA or experiments.

- Modern versions employ advanced models that can analyse granular data and external factors more flexibly, reducing analyst bias without relying on personal identifiers.

Ways to enable

- In-house – Requires time and resource investment to handle data preparation, modelling and analysis. Open source models such as Meta’s Robyn or Google’s Meridian provide analysts with easier access to high quality models.

- Measurement software – Companies like Rockerbox or Lifesight provide end-to-end solutions. Many offer both proprietary and open source models, media channel APIs for faster data preparation, and streamlined dashboards. Pricing models can be either subscription or project-based.

- Scope and complexity can vary widely – Projects can take anywhere between 2-6 months to complete, and can be further calibrated with experiment data to reflect causal effects. Data & Analytics service providers such as Skeleton Key offer flexible partnership options to guide you through the process.

Use it to

- Identify the contribution of marketing channels across different campaign types (offline, online, paid, owned, earned, promotions).

- Evaluate how various factors can influence sales outcomes (and thus investment decisions). These include but aren’t limited to geographical disparities, product segments, seasonality and brand campaign halo effects.

- Strategically allocate budget across performance and brand portfolios, channels, and/or tactics. This integrated view considers investment saturation levels and budget scenarios to guide forecasting and optimisation decisions. For most organisations, this process occurs biannually, though some large advertisers may operate on a quarterly cadence.

For additional tips on how to get started with MMMs, check out this post by media and adtech veteran Dan Elddine.

Fortifying attribution for the privacy-first era

Closed-loop attribution faces an uphill battle of signal and identity attrition.

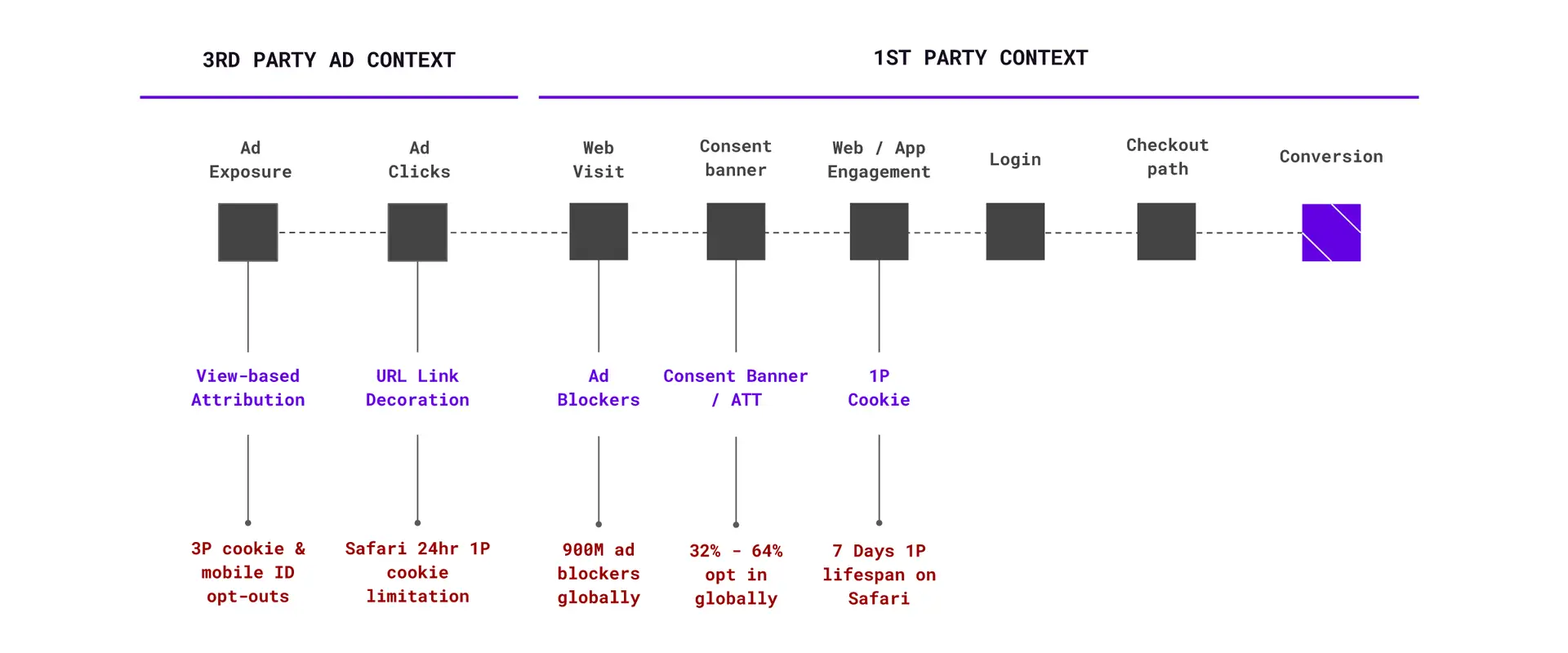

Attribution models are heavily dependent on the ability to track events along the customer’s path to purchase. If we overlay user privacy developments (discussed in the “Last-click marketers must evolve” section) onto the customer journey, it becomes clear that attribution faces signal loss from identity attrition occurring across multiple checkpoints:

- Smaller pool of ad event data due to user tracking opt-outs and ad blockers.

- Smaller pool of first party data due to user consent opt-outs.

- Shorter duration of first party data storage if 3rd party trackers are present.

This is illustrated below in Figure C:

Figure C – How the privacy shift disrupts closed-loop attribution

Put simply, attribution models have less and less data to work with. Despite this, they remain the go-to measurement method for many small and medium-sized businesses (SMBs) that lack the budgets, scale, or business model suitability for more complex methods like MMMs or large-scale incrementality experiments. This also means that these SMBs have more to lose from cookie deprecation, as it impacts critical functions including end-to-end tracking, targeting and real-time optimisation.

In response, several industry developments have focused on revitalising attribution and evolving it for today’s privacy-first landscape:

Durable identifiers

- Used to bridge gaps in the conversion path with persistent IDs (typically email addresses) collected from first party interactions.

- Advertisers and publishers share these durable IDs with media platforms or universal identifiers (e.g. ID5, UID 2.0, RampID) to enable attribution and audience targeting across third party contexts.
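A minimal sketch of how an email address might be turned into a hashed identifier before sharing is shown below. It assumes the common pattern of trimming whitespace, lowercasing and applying SHA-256; individual platforms and ID providers publish their own normalisation rules, which should take precedence.

```python
import hashlib

def hash_email(email: str) -> str:
    """Normalise an email address and return its SHA-256 hex digest.
    Normalising first means the same customer produces the same hash
    regardless of how the address was typed."""
    normalised = email.strip().lower()
    return hashlib.sha256(normalised.encode("utf-8")).hexdigest()

# The same address captured at two touchpoints resolves to one identifier
web_signup = hash_email("  Jane.Doe@Example.com ")
store_receipt = hash_email("jane.doe@example.com")
print(web_signup == store_receipt)
```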

Modeling

- Used to estimate conversions generated by non-observable users. It answers questions like: How many impressions or clicks were missing user IDs? How many conversions did those interactions drive?

- Also widely employed in multi-touch attribution to reduce bias from arbitrary rules-based distribution of conversion credit (see “Measurement methods explained” section).
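The simplest possible version of conversion modelling is sketched below: it assumes clicks without user IDs convert at the same rate as observable clicks. That assumption is purely illustrative, since production models typically adjust for differences between observable and non-observable users, and all numbers are hypothetical.

```python
def model_unobserved_conversions(observed_clicks, observed_conversions,
                                 unobserved_clicks):
    """Estimate conversions from clicks that could not be tied to a user ID,
    assuming they convert at the observed rate (an illustrative assumption)."""
    observed_rate = observed_conversions / observed_clicks
    modelled = unobserved_clicks * observed_rate
    return {
        "observed_rate": observed_rate,
        "modelled_conversions": modelled,
        "total_conversions": observed_conversions + modelled,
    }

# Hypothetical campaign: 20% of clicks are missing user IDs
estimate = model_unobserved_conversions(
    observed_clicks=8_000, observed_conversions=240, unobserved_clicks=2_000
)
print(estimate)
```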

Privacy enhancing technologies

- A collection of technologies used to enable the secure collection and sharing of customer PII (e.g. email, phone number) between brands and marketing partners.

- These include but aren’t limited to encryption methodologies, adding noise to datasets, and aggregating individual users into cohorts to prevent identification.
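Two of these ideas, cohort aggregation and added noise, can be sketched in a few lines. The example below suppresses cohorts under a minimum size and adds Laplace noise to the remaining counts. The cohort names, the `min_cohort` threshold and the `epsilon` value are all hypothetical, and this is not a description of how any specific privacy product works.

```python
import random

def noisy_cohort_report(counts, epsilon=1.0, min_cohort=50, seed=None):
    """Report conversions per cohort with small cohorts suppressed and
    Laplace noise (scale 1/epsilon) added to each remaining count."""
    rng = random.Random(seed)
    report = {}
    for cohort, n in counts.items():
        if n < min_cohort:
            continue  # too few users to report without risking identification
        # The difference of two exponential draws with rate epsilon
        # follows a Laplace distribution with scale 1/epsilon
        noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
        report[cohort] = max(0, round(n + noise))
    return report

# Hypothetical conversions by age band and channel
cohort_counts = {"18-24|search": 320, "25-34|social": 410, "65+|display": 12}
report = noisy_cohort_report(cohort_counts, epsilon=1.0, min_cohort=50, seed=7)
print(report)
```

A smaller `epsilon` adds more noise and therefore more privacy, at the cost of less precise counts.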

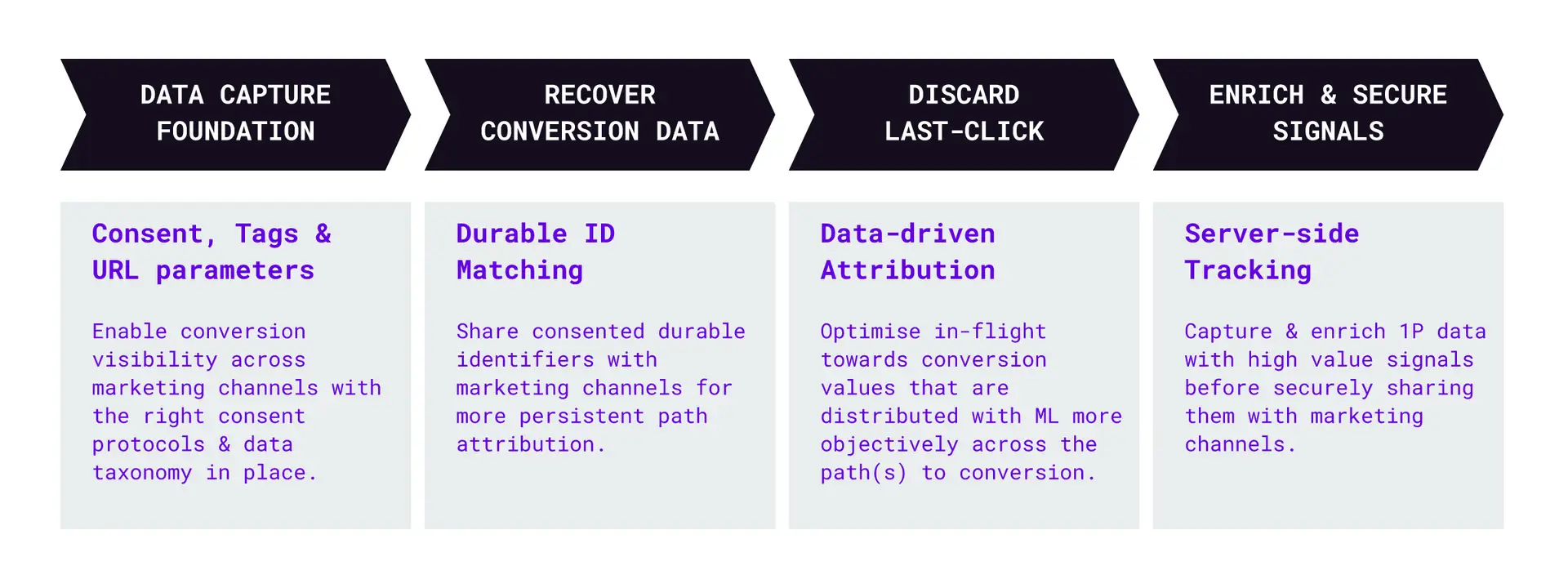

Taking attribution further

Adopting these new attribution technologies can lead to significant gains for marketers, with a potential 30-50% of conversions and a 10-15% improvement in cost-per-acquisition. Beyond foundational data capture methods (e.g. consent management, tag management, URL parameter tools), marketers should consider three practical upgrades:

Recover conversion data

- Perform durable customer identifier matching by upgrading standard platform pixels or establishing API connections from your own server.

- Walled garden media platforms such as TikTok, Meta and Google Ads provide various durable ID matching solutions (e.g. “Advanced Matching”, “Enhanced Conversions”).

- Open web and retail media networks can leverage ID graphs (e.g. UID 2.0 on The Trade Desk, Shopper Graph on Criteo) to ingest durable identifiers from marketers to reinforce attribution, targeting and bidding.

Discard last-click attribution

- Enable data-driven attribution (DDA) to distribute conversion credit more objectively across channels and ads.

- Google Analytics provides a free version that is tightly integrated with the Google Ads ecosystem for real-time bidding. However, marketers with diverse channel investments will require more neutral options.

- Many site, product or app analytics tools (e.g. Adobe, Amplitude, Singular) provide low-code DDA models and dashboards. However, these may lack view-based data or real-time bidding integrations.

- Newer measurement vendors like Rockerbox and Northbeam offer the best of both worlds by providing a neutral measurement solution alongside access to view-based attribution from walled garden media platforms for a more complete view of the customer journey.

Enrich & secure data signals

- Enable server-side tracking to share online and/or offline customer events and consented identifiers with marketing and media platforms from your own secure server.

- This method uses an API connection between advertiser and marketing/media platform servers to more securely transmit relevant conversion data and identifiers.

- Marketers can use their server to enrich their data with events and identifiers from various customer touchpoints (in-store, customer calls, product data, etc.) and/or create high value events that correspond with business metrics (LTV, product margins, etc.) for optimisation within media platforms.
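The sketch below shows what preparing a server-side event could look like: it checks consent, hashes the identifier, and sends a margin-based value rather than raw revenue. The field names and the `build_conversion_event` helper are hypothetical; each media platform defines its own event schema and API, so treat this as an outline of the idea only.

```python
import hashlib
import json
import time

def build_conversion_event(email, order_value, margin_rate, consented,
                           event_time=None):
    """Build a conversion payload on the advertiser's own server.
    Returns None when the customer has not consented to data sharing."""
    if not consented:
        return None
    hashed = hashlib.sha256(email.strip().lower().encode("utf-8")).hexdigest()
    return {
        "event_name": "purchase",  # hypothetical field names throughout
        "event_time": int(event_time if event_time is not None else time.time()),
        "user": {"hashed_email": hashed},
        # Enrichment: optimise towards margin instead of order revenue
        "value": round(order_value * margin_rate, 2),
        "source": "server",
    }

event = build_conversion_event(
    "jane.doe@example.com", order_value=200.0, margin_rate=0.35,
    consented=True, event_time=1_700_000_000,
)
print(json.dumps(event, indent=2))
```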

To recap, durable IDs provide persistent recovery of conversion data, DDA brings greater objectivity, and server-side tracking unlocks advanced security and enrichment capabilities (Figure D).

Figure D – Advancing media attribution with new tools

These advancements underscore the need for marketers to build a solid foundation in data capture and consent. This means collecting critical customer path and event data responsibly and with respect for both user privacy and business objectives.

Last but not least, while the Google Chrome Privacy Sandbox remains under development, its implications are significant. With the impending cookieless future for most browsers, the industry will likely rely on the Sandbox for deterministic, aggregated attribution on Chrome (without resorting to cookies or other persistent identifiers). However, the technology is still nascent, with no clear timeline or plans for integrating data-driven or multi-touch attribution models.

For more on this subject, check out this video from our YouTube channel where we deep dive into the future of attribution.

Harnessing the MMM resurgence

MMMs are versatile but complex tools within the modern marketing measurement landscape. Their recent resurgence has been driven by their ability to provide comprehensive coverage of marketing activity with increasing granularity, without relying on fast-deprecating user-level identifiers. At the same time, the proliferation of open-source models and measurement vendors is improving time-to-analyses with increased automation and reduced analyst bias thanks to more objective and transparent models.

Meta likens MMMs to the un-baking of a cake, where one would peel apart its layers to understand what makes it so delicious. With marketing, econometric models tease out the incremental impact of each activity using meaningful variations in historical data. This in turn visualises activities as individual “layers”, each sized proportionately to their contribution to the business bottom line. Saturation curves and lag effects are also computed to reflect the realities of actual marketing interactions.
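Lag effects and saturation curves can be illustrated with two small transformations that are common in MMM work: geometric adstock (carry-over of past spend) and a Hill-type saturation curve (diminishing returns). The decay rate, half-saturation point and spend figures below are hypothetical, and real models estimate these parameters from data rather than fixing them by hand.

```python
def geometric_adstock(spend, decay):
    """Carry a fraction of each period's effect into the following periods."""
    carried, out = 0.0, []
    for x in spend:
        carried = x + decay * carried
        out.append(carried)
    return out

def hill_saturation(x, half_saturation, shape=1.0):
    """Map adstocked spend to a 0-1 response with diminishing returns.
    The response reaches 0.5 when x equals half_saturation."""
    if x <= 0:
        return 0.0
    return x ** shape / (x ** shape + half_saturation ** shape)

# A single burst of spend keeps working in later weeks
weekly_spend = [100, 0, 0]
adstocked = geometric_adstock(weekly_spend, decay=0.5)
print(adstocked)

# Doubling spend past the half-saturation point adds far less response
for level in (200, 400, 800):
    print(level, round(hill_saturation(level, half_saturation=200), 3))
```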

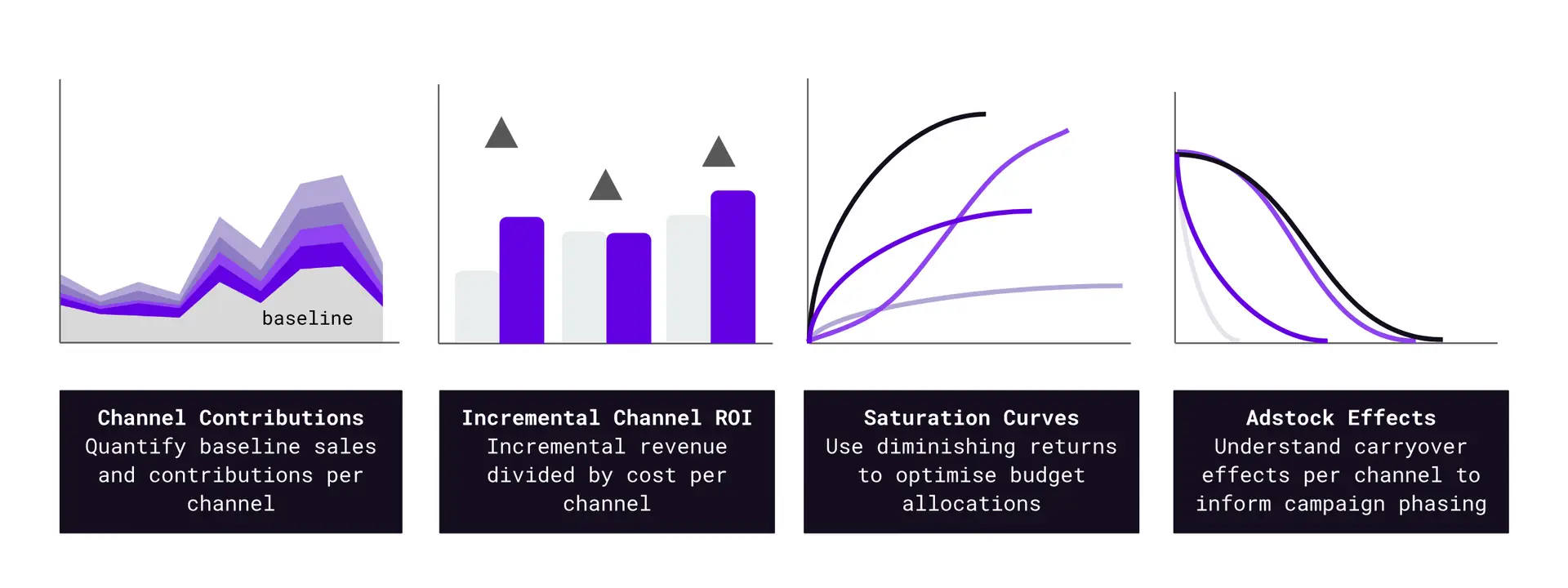

Together, the model outputs (Figure E) allow marketers to estimate, forecast, and optimise performance across their marketing portfolio*:

Figure E – Common MMM Outputs

* While MMMs consider the broader factors of the 4Ps of marketing (promotion, price, product, place), this guide focuses primarily on the promotions aspect (paid advertising and organic marketing channels).

MMMs are especially useful for...

Marketers with diverse marketing channels – MMMs can analyse both online and offline marketing channels, as well as external events. This provides flexible and comprehensive analysis for companies with a variety of scaled marketing activities, such as those in retail, automotive, and CPG industries.

Finance teams handling marketing investments – MMMs help identify sales contributions, diminishing and lagged returns, and simulate scenarios to track and improve investment allocations across marketing activities.

MMMs are less useful for...

Startups & small businesses – Without historical data, these companies will benefit more from simpler, more immediate attribution methods until they have more diversified marketing activities and sufficient data to support robust MMM outputs.

Marketers testing a new channel – The lack of scaled historical spends of a new channel means its impact will not be reliably or adequately detected in MMMs. Incrementality experiments or DDA are better suited for this purpose.

Marketers requiring real-time optimisation – MMMs quantify the impact of activities from historical data spanning at least 1-2 years. As such, they typically lack the ability to capture the granular, dynamic in-flight signals (click, attention, identifiers, etc.) required for high frequency or real-time optimisation within media channels.

Key considerations before starting

The complexities of MMMs require both proficiency in statistical modelling as well as a deep understanding of marketing strategy and tactics to derive meaningful analysis. This dual requirement gives rise to the following common watchouts for both marketers and analysts:

Select the right tool for the job – Analysts need to first be able to discern whether MMMs are suitable for the business question at hand. For example, MMMs can incorporate brand metrics to better understand their impact on sales. On the other hand, if the goal is to optimise customer paths that traverse paid and organic channels, DDA or incrementality experiments are a better fit.

Be aware of factors that affect insight quality – Common culprit variables include a lack of campaign variation (e.g. always-on activity), high channel correlation (e.g. TV and YouTube), and high KPI correlation (e.g. branded keyword search campaigns). Adjustments to media strategy and modelling may be required to mitigate the impact of these factors to produce more specific and/or accurate insights.
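A quick pre-modelling check for channel correlation could look like the sketch below, which computes Pearson correlations between spend series and flags highly correlated pairs. The spend figures and the 0.8 threshold are hypothetical; what counts as too correlated depends on the model and the data.

```python
import math
from itertools import combinations

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

def correlated_pairs(channels, threshold=0.8):
    """Return channel pairs whose spend moves together closely enough that
    a model may struggle to separate their effects."""
    flagged = []
    for a, b in combinations(sorted(channels), 2):
        r = pearson(channels[a], channels[b])
        if abs(r) >= threshold:
            flagged.append((a, b, round(r, 3)))
    return flagged

# Hypothetical weekly spend (in thousands)
spend = {
    "tv": [10, 20, 30, 40, 50],
    "youtube": [12, 19, 33, 41, 48],
    "search": [30, 10, 40, 20, 35],
}
flagged = correlated_pairs(spend)
print(flagged)
```

Here TV and YouTube are flagged because their budgets rise and fall together, which is the situation where staggering flights or adding geo variation can help.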

Signal granularity increases noise – While newer models promise to incorporate ad group or even creative level data, this increased granularity also introduces more noise. This in turn increases the risk of an overfitted model which reflects noise instead of clear, reliable patterns. Thus, it’s essential to manage the trade-off between insight granularity and model integrity.

Data requirements and considerations

MMMs are unique in their ability to flexibly incorporate a wide range of drivers and outcomes. However, the data requirements aren’t one-size-fits-all. They vary based on the organisation’s specific business questions, its marketing activities and goals, and the length of its business sales cycles. These requirements can be grouped in the following categories:

Marketing spend & exposure – While most models require marketing spends, exposure metrics (e.g. impressions, emails sent) are a better quantification of the activity delivered to end consumers. That said, cost is vital for computing metrics like ROAS along with acting as a fallback variable for channels without exposure data.

Marketing KPIs – These are primarily business KPIs (e.g. sales revenue, market share) or customer-specific KPIs (e.g. acquisition, retention, LTV). However, upper funnel proxy metrics (e.g. form submissions, quality leads) may be required for B2B companies with protracted enterprise sales cycles.

Situational variables – These are external factors that can impact sales performance. Examples include promotional calendars, seasonality, weather, macroeconomic events, competitor activity, and more.

[Optional] Experiment results – Some measurement vendors use results from incrementality experiments to either directly calibrate their models, or as reference for model optimisation.

Additional considerations are as follows:

Data preparation


Sample size

One of the biggest challenges in executing MMMs is ensuring a sufficient sample size. To achieve robust results, MMMs typically require two years of weekly data or at least one year of daily data. Additionally, a large enough volume of the output KPI (ideally >100 events per week) is needed to establish a clear relationship between input and output variables.

That said, there are ways to increase sample size by strategically incorporating variability. Examples include but aren’t limited to:

- Collecting data by sub-national geo cuts

- Pooling data from similar brands within the same product category
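The rules of thumb above can be turned into a small readiness check. The sketch below encodes the thresholds mentioned in this section (two years of weekly data or one year of daily data, and more than 100 KPI events per week); the function name and structure are hypothetical.

```python
# Thresholds taken from the guidance in this section
MIN_PERIODS = {"weekly": 104, "daily": 365}
MIN_WEEKLY_EVENTS = 100

def check_mmm_readiness(n_periods, granularity, avg_weekly_events):
    """Return a list of data issues; an empty list means the rules of
    thumb are met."""
    issues = []
    required = MIN_PERIODS[granularity]
    if n_periods < required:
        issues.append(
            f"only {n_periods} {granularity} periods, at least {required} suggested"
        )
    if avg_weekly_events <= MIN_WEEKLY_EVENTS:
        issues.append(
            f"{avg_weekly_events} KPI events per week, more than "
            f"{MIN_WEEKLY_EVENTS} suggested"
        )
    return issues

print(check_mmm_readiness(104, "weekly", 150))
print(check_mmm_readiness(60, "weekly", 80))
```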

Granularity vs. Noise

Many businesses end up running multiple models across different data cuts (e.g. geo, product, brand) to achieve the granularity needed to answer evaluation, forecasting and optimisation questions meaningfully.

For optimisation specifically, marketers generally want actionable insights at a granular level. This could mean comparing brand vs DR, prospecting vs retargeting, brand search vs generic search, ad format types and more. As previously discussed however, there’s a limit to granularity before a model is overcome by noise. To guard against this, each data cut should ideally represent a substantial share of total spend, with consistent activity across the time frame of the prepared dataset.

Selecting the right MMM solution

Last but not least, measurement models and vendors can vary significantly based on methodology, technology and unique offerings. Differences can span data handling, model biases, automation capabilities, and ongoing measurement services. To help you assess and decide which options best suit your company’s needs, we’ve compiled the following set of high-level questions:

Modeling approach – What’s the underlying modelling approach? What statistical techniques are employed? What are the model’s underlying assumptions?

Variable selection – How are variables selected for inclusion in the model? How are confounding variables handled?

Data collection and cleaning – How is data collected, cleaned, and prepared for analysis?

Reporting and insights – What types of outputs and services are provided? (e.g. ROI reports, response curves, investment scenarios, planning recommendations, ongoing validation, data visualisations, granularity levels)

Proving marketing impact with incrementality

The most compelling evidence demonstrates cause and effect – and that’s where incrementality shines. For marketers, it identifies business results that would not have been achieved without intentional marketing activity. Put simply: if a company cuts $x from its marketing campaigns, how much would sales suffer?

In performance marketing, incrementality quantifies the additional conversions, revenue and engagement generated. For brand marketing, it measures the incremental lift in awareness, consideration, and/or intent among target audiences. As brand building is essential for amplifying performance outcomes, a comprehensive approach measuring both is vital for marketers with substantial investments in each.

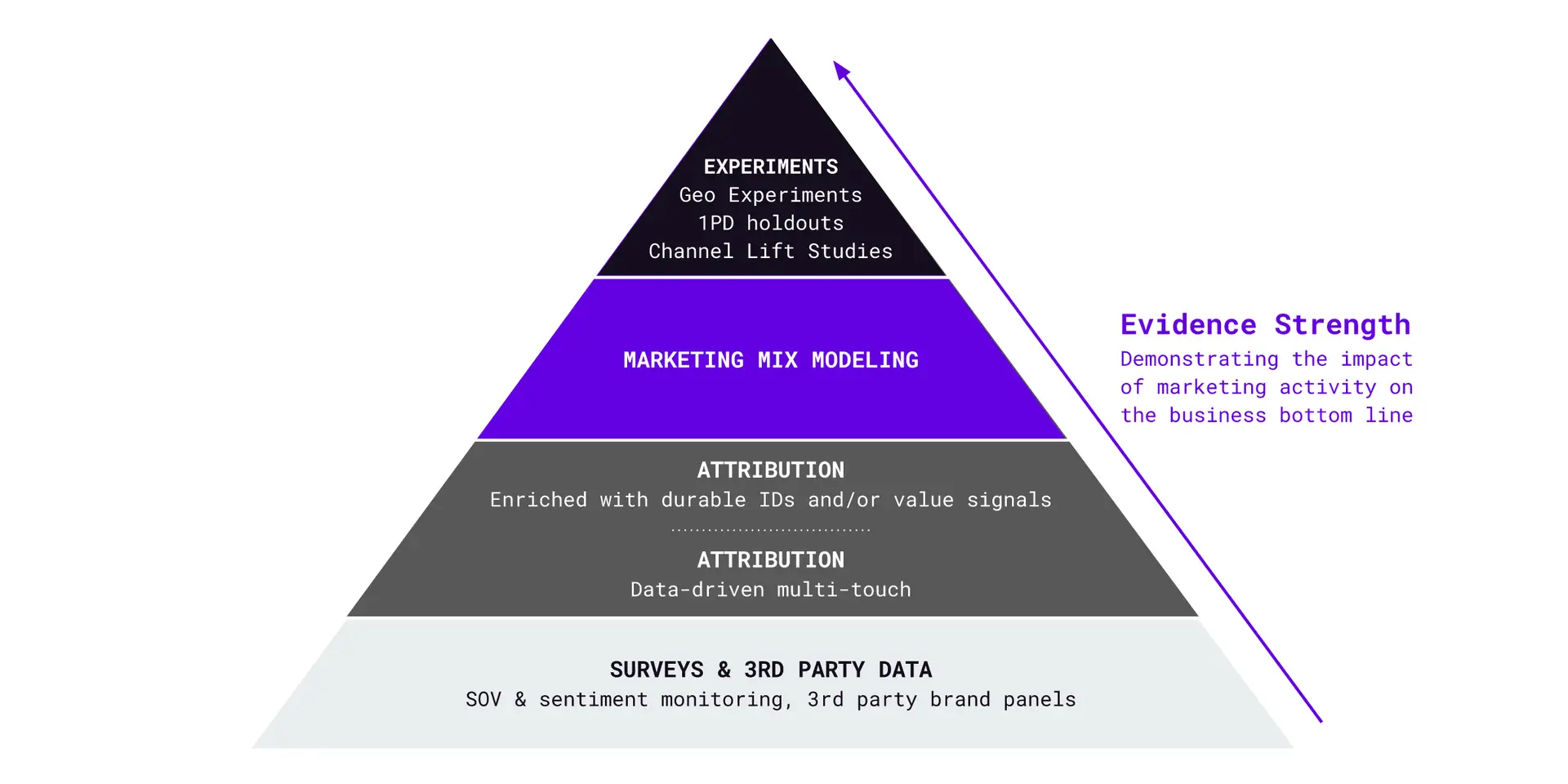

Incrementality experiments utilising RCTs are the gold standard for measuring these causal effects (see Figure F). They assess marketing’s impact on treatment and control groups, revealing whether specific channels, creatives, tactics, or budget allocations drive any incremental gains during the experiment period.

Figure F – Hierarchy of evidence in marketing measurement

There are no silver bullets

While incrementality experiments are considered the gold standard for measuring cause-and-effect across scientific disciplines, they aren’t without inherent limitations. They require time, sufficient scale to detect significant effects, and incur the opportunity cost of withholding spend for control groups (limiting the number of experiments that can be conducted per quarter). Moreover, they don’t account for diminishing returns or the decay effects that marketing campaigns experience over time.

These constraints mean that we need other complementary measurement methods to support the full breadth of marketers’ in-flight, tactical, and strategic planning decisions. Thus, a balanced approach is required to attain the end goal of incrementality-first marketing measurement.

This is where MMMs and DDA come in. MMMs and DDA analyse continuous campaign data and user-level information respectively. Together, they provide greater coverage and more granular tracking. While not considered the gold standard like incrementality experiments, each provides differentiated, valuable, and actionable insights into marketing effectiveness.

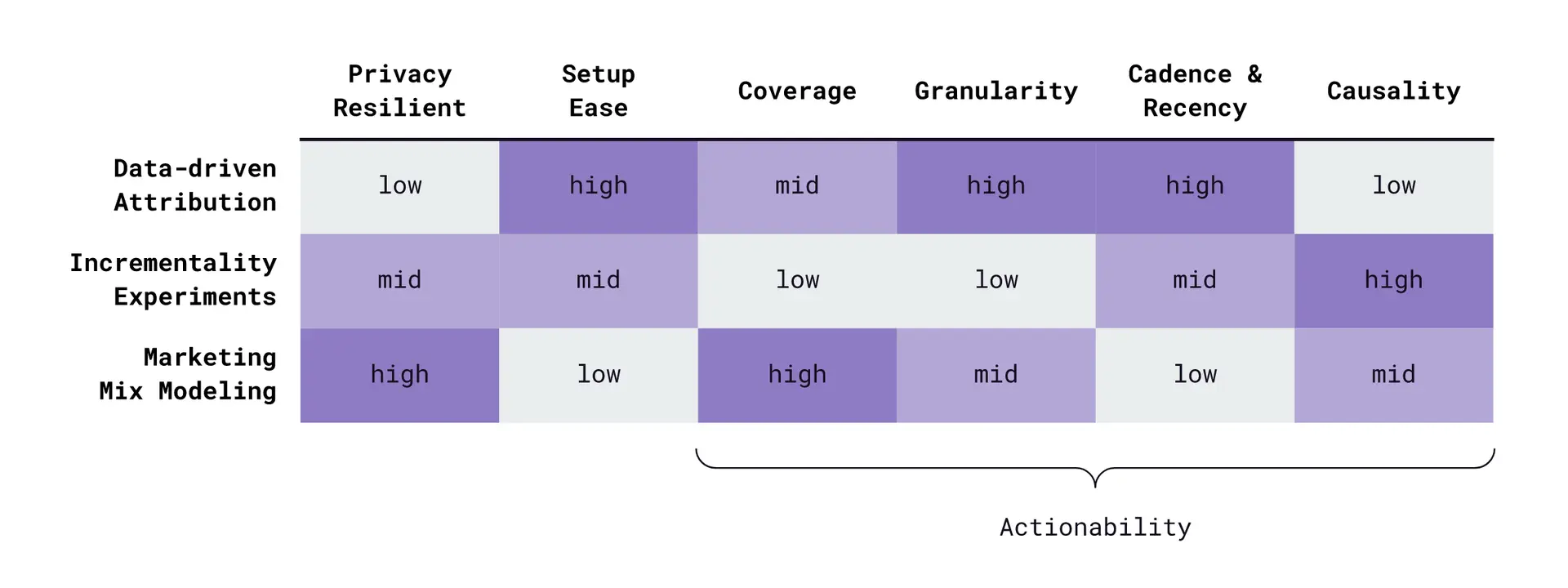

Ultimately, each method has its own strengths and weaknesses. This is summarised in Figure G below:

Figure G – Strengths and weaknesses of DDA, incrementality experiments and MMMs

Mature marketers often utilise at least two of these three methods, striking an optimal balance between statistical rigour, sophistication and actionability.

To get started, marketers should familiarise themselves with current deployment options, understand the data and measurement scopes of each approach, and recognise their respective strengths and limitations. Last but not least, ease of implementation (see Figure F) between approaches can vary widely. While many off-the-shelf, low-code solutions have emerged or are emerging, specific requirements such as data security or custom models are better served with a build-it-yourself approach.

The incrementality-led measurement framework

As we’ve discussed, no single measurement method can address all questions across the marketing lifecycle.

Each method utilises data at varying levels of granularity and activity scopes, using distinct counting and/or modelling approaches that are subject to dwindling user consent. As such, discrepancies are an inevitable consequence and often not worth the effort to reconcile. Put simply, achieving 100% deterministic attribution – the “everything everywhere all at once” single source of truth – is an unattainable ideal.

A more fruitful approach involves a measurement system centred on incrementality. By employing a combination of appropriate methods, marketers can establish a strong foundation for evidence-based decision-making across both strategic and tactical initiatives.

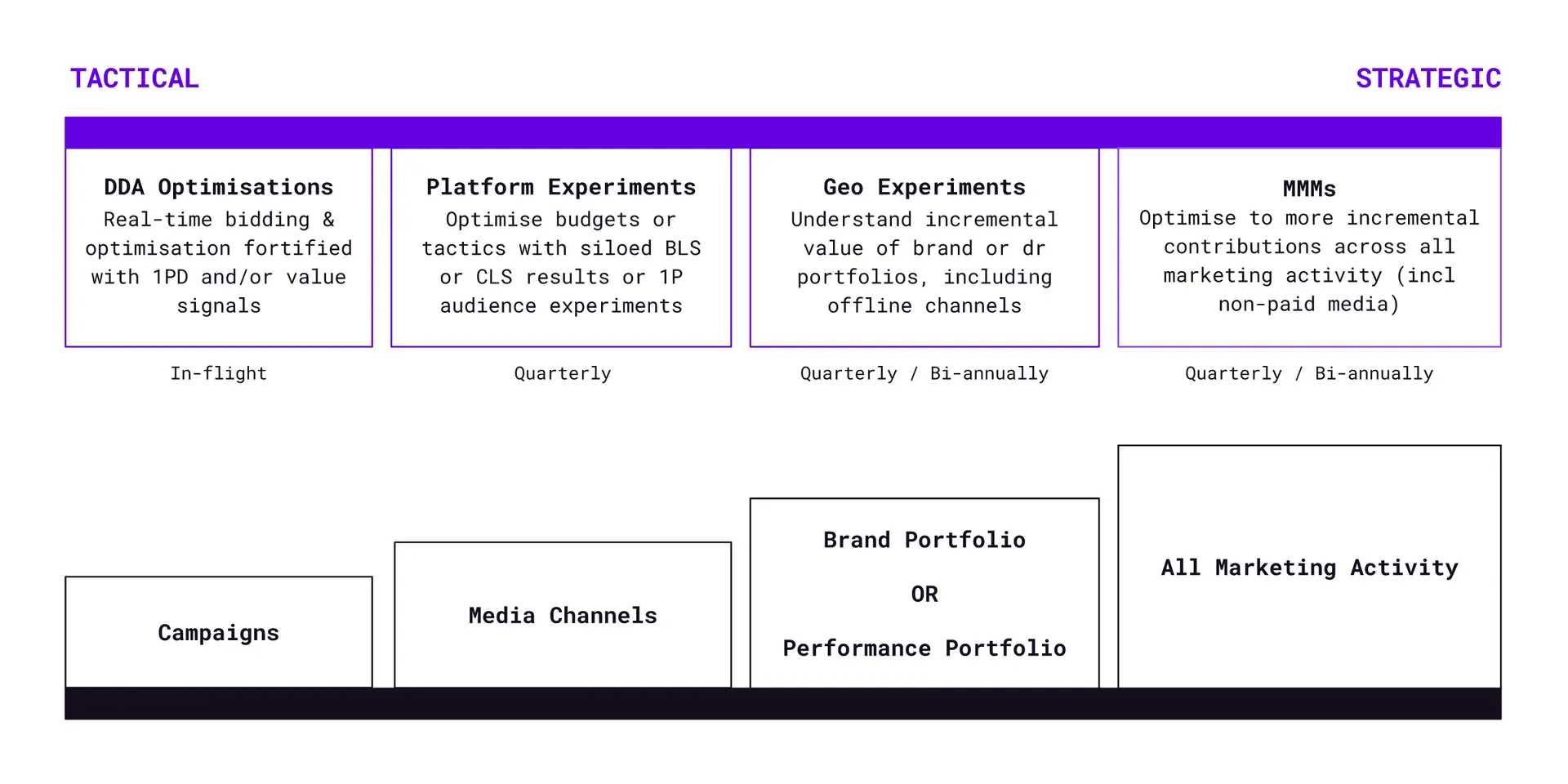

Figure H – Measurement Framework

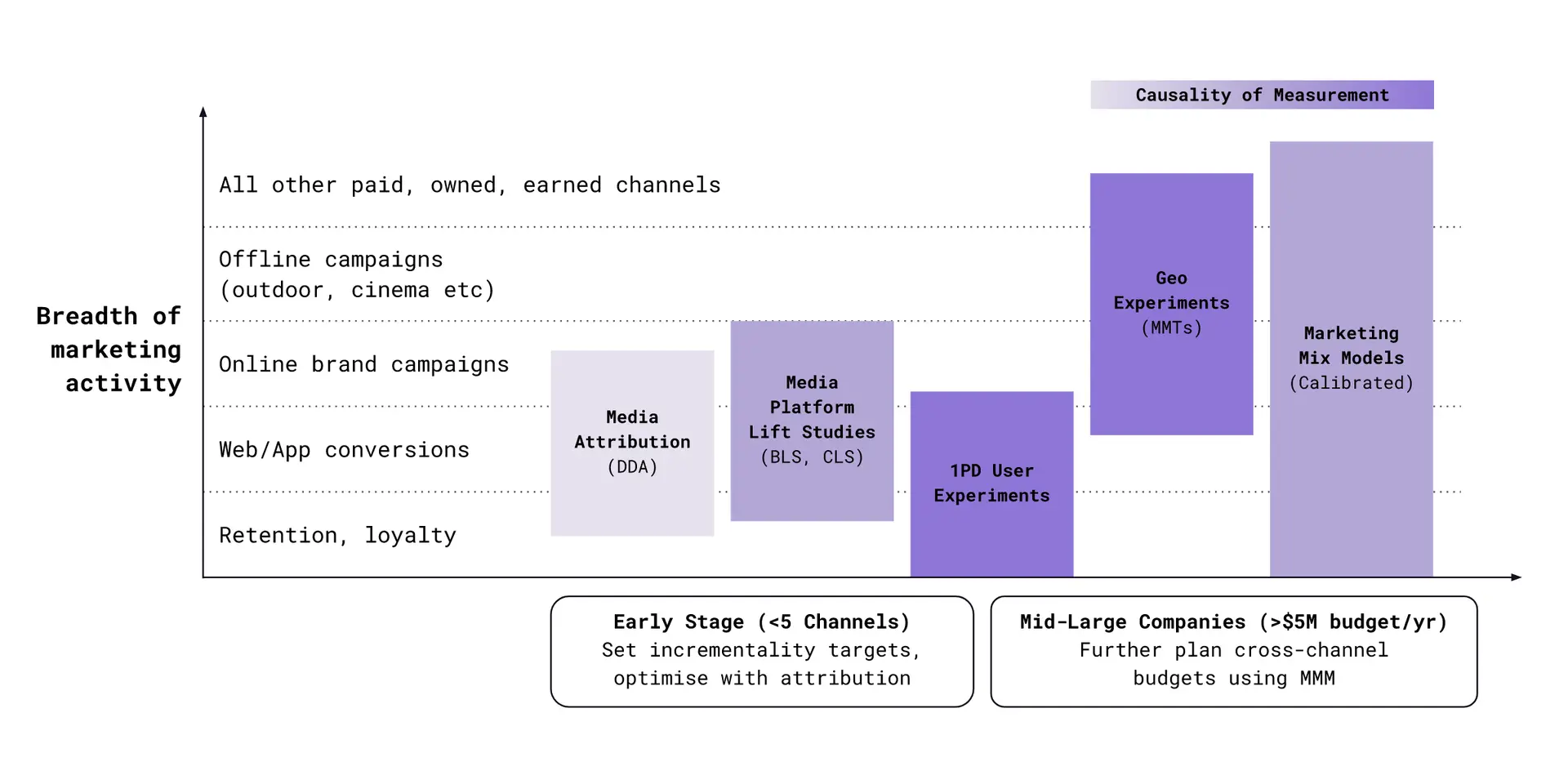

The above (Figure H) is an example of how each method plays a part in improving incrementality and coverage across an increasingly complex scope of marketing activities and decisions. We further break them down below.

Strategic goals

- Total sales lift of all performance and brand activity

- Total sales lift of all performance or brand activity

MMMs are most suitable here for advertisers with substantial spends across a diverse set of paid and non-paid channels. They allow you to forecast marginal gains, simulate budget scenarios based on incremental contributions across activities, and understand external effects on the business (e.g. macroeconomics, seasonality, competitor activity). Geo experiments can also complement MMMs by measuring aggregate activities across multiple channels.

Tactical goals

- Brand or conversions lift of individual channels

- Brand or conversions lift of individual tactics

- Conversion lift for existing prospects and customers

Media campaign managers typically use in-platform experiments (e.g. YouTube brand lift studies) or first party data audiences to validate and optimise channel-level activity. While these experiments don’t account for other channels’ contributions, they’re useful for optimising tactics such as targeting and creatives.

Consistent testing across quarters can stabilise understanding of incremental uplift levels. When combined with strategic-level measurement, these insights can inform cross-channel budget reallocations.
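One simple way to see how repeated tests stabilise the estimate is to pool quarterly results with inverse-variance weighting. This is a sketch under a strong assumption: that the true lift is roughly constant across quarters, which seasonality or creative changes can easily violate. The quarterly lift figures and standard errors below are invented for illustration.

```python
def pooled_lift(estimates):
    """Pool (lift, standard_error) pairs using inverse-variance weights.

    More precise tests (smaller standard errors) get more weight.
    """
    weights = [1 / se ** 2 for _, se in estimates]
    pooled = sum(w * lift for w, (lift, _) in zip(weights, estimates)) / sum(weights)
    pooled_se = (1 / sum(weights)) ** 0.5
    return pooled, pooled_se


# Hypothetical relative lift and standard error from three quarterly tests
quarterly_tests = [(0.18, 0.06), (0.25, 0.08), (0.20, 0.05)]

lift, se = pooled_lift(quarterly_tests)
print(f"pooled lift: {lift:.3f}, standard error: {se:.3f}")
```

The pooled standard error is smaller than that of any single test, which is the statistical sense in which consistent testing firms up the picture over time.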

In-flight goals

- Bid towards high value impression levers or customer paths that predict brand or conversion lift incrementality.

- Test different levers or bidding methods (LTV vs CPA etc.) using the experiments listed above.

Identifying truly incremental signals at the impression level is akin to fishing needles from a haystack. While there are now more studies around the causal effects of ad attention on brand lift, performance campaigns present much higher entropy given the wider distance between an ad impression and the point of conversion.

That said, some data-driven attribution solutions (e.g. Google’s) claim to be able to identify incremental conversion paths and automate bidding decisions. In addition, Meta announced in Aug 2024 that incremental conversions will be incorporated into its attribution models in the near future.

Incrementality experiments as ground truths for calibration

A marketer can make precisely inaccurate decisions.

Relying solely on last-click attribution (or any single measurement method) in a multi-channel world virtually guarantees this. Precision requires context grounded in reality to achieve accuracy, and incrementality experiments provide that context.

When experiments cover a significant portion of media spend, they start to become a reliable source for validating and/or calibrating other measurement methods (Figure I).

Figure I – Incrementality experiments as ground truth for calibrating MMM and DDA

Calibration extends the domain of incrementality in several ways:

Enhancing MMM accuracy by integrating experiment results – Modern MMMs, such as Meta’s Robyn and Google’s Meridian, allow analysts to integrate data from incrementality experiments or lower-fidelity sources like industry benchmarks to improve the accuracy of their outputs.

Optimising campaign tactics that show lower incrementality – While creative A/B testing is the most common use of experiments, marketers can optimise other levers (from targeting to value-based signals) to improve a channel’s overall incrementality.

Applying incrementality multipliers to tCPA and tROAS bids – Campaign managers can use incrementality multipliers to set relative incremental Cost Per Acquisition (iCPA) or incremental Return on Ad Spend (iROAS) bids across channels. However, this approach often leads to aggressive KPIs that “subtract” organic conversions from total conversion counts. Caution should be exercised to avoid amplifying the flawed hierarchy of last-click attribution and/or severely destabilising spend and conversion volumes.
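The arithmetic behind incrementality multipliers can be sketched in a few lines of Python. The function names and all figures are hypothetical, and the sketch assumes a single multiplier per channel derived from one experiment, defined here as incremental conversions divided by platform-attributed conversions.

```python
def incrementality_factor(incremental_conversions, attributed_conversions):
    """Share of platform-attributed conversions that the experiment found incremental."""
    return incremental_conversions / attributed_conversions


def platform_tcpa(target_icpa, factor):
    """Platform tCPA needed to hit a target incremental CPA (iCPA)."""
    return target_icpa * factor


def platform_troas(target_iroas, factor):
    """Platform tROAS needed to hit a target incremental ROAS (iROAS)."""
    return target_iroas / factor


# Made-up example: the platform reports 1,000 conversions,
# but the experiment finds only 400 were incremental.
factor = incrementality_factor(400, 1_000)

tcpa = platform_tcpa(target_icpa=50.0, factor=factor)
troas = platform_troas(target_iroas=2.0, factor=factor)

print(f"factor: {factor:.2f}, platform tCPA: {tcpa:.2f}, platform tROAS: {troas:.2f}")
```

In this example a $50 iCPA goal translates into a $20 platform tCPA, and a 2.0 iROAS goal into a 5.0 platform tROAS. Targets this tight illustrate the "aggressive KPI" effect described above and why spend and conversion volumes can destabilise if the multipliers are applied abruptly.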

Growth stages, channel diversity and budget scale

For advertisers with smaller budgets (<$5M annually), lower channel diversity, and a primarily digital focus, DDA and incrementality experiments generally provide sufficient measurement coverage.

However, for larger advertisers with offline activity and complex owned, earned and promotional programmes, MMMs and a well-structured incrementality programme become necessary to achieve sufficient measurement coverage and establish causality (see Figure J).

Figure J – Coverage of channels by measurement methods

Bringing it all together

Change can be difficult, but it’s an inevitable requirement for marketers looking to evolve their measurement practices and maximise marketing effectiveness and efficiency in the privacy-first digital ecosystem.

Here are our 6 steps for marketers looking to build and operationalise a modern measurement framework:

1. Define objectives – Align on business objectives with key stakeholders. Where necessary, break down business KPIs into short and long term marketing metrics to guide subsequent steps.

2. Strengthen data foundations – Review and address gaps in event capture and management tools. Fortify the path to conversion with durable first party data whenever possible.

3. Tailor measurement framework – Design a measurement framework that incorporates the right combination of methodologies to answer business questions and address optimisation needs.

4. Create a learning agenda – Create a structured short and long term learning agenda. Set the business on a course to translate hypotheses into high-conviction strategies and/or tactical best practices.

5. Calibrate for incrementality – Where applicable, synthesise findings from multiple methods using incrementality as the guiding principle.

6. Bring everyone along – Foster alignment and update processes to operationalise your new measurement framework, involving everyone from marketing strategists and campaign managers to data operations teams and analysts.

Congratulations, you now know more about the core concepts of modern marketing measurement than most people in the industry!

If you found this guide useful, check out our other blog posts or subscribe to our YouTube channel for more good stuff.

Last updated: 26 August, 2024